Technical SEO is often described as “the most important part of SEO until it isn’t.” The reason is that crawling and indexing are frequently the real bottlenecks for websites: if search engines cannot crawl and index your pages correctly, nothing else you do matters.

Furthermore, as AI-driven search grows, technical SEO is becoming even more essential. This article is packed with prioritized checklists, quick wins, and unique tips for optimizing your site for AI-powered search engines.

TL;DR Summary

- Ensure that there is nothing that prevents your main pages from being indexed.

- If you have broken URLs, redirect them to the most similar live content to regain the link equity you have lost.

- Use internal linking to strengthen your site’s structure and pass ranking signals to your most important pages.

- Enhance Core Web Vitals and overall page speed to ensure a positive visitor experience on your website.

- Use XML sitemaps and robots.txt correctly to make life easier for search crawlers.

What is Technical SEO?

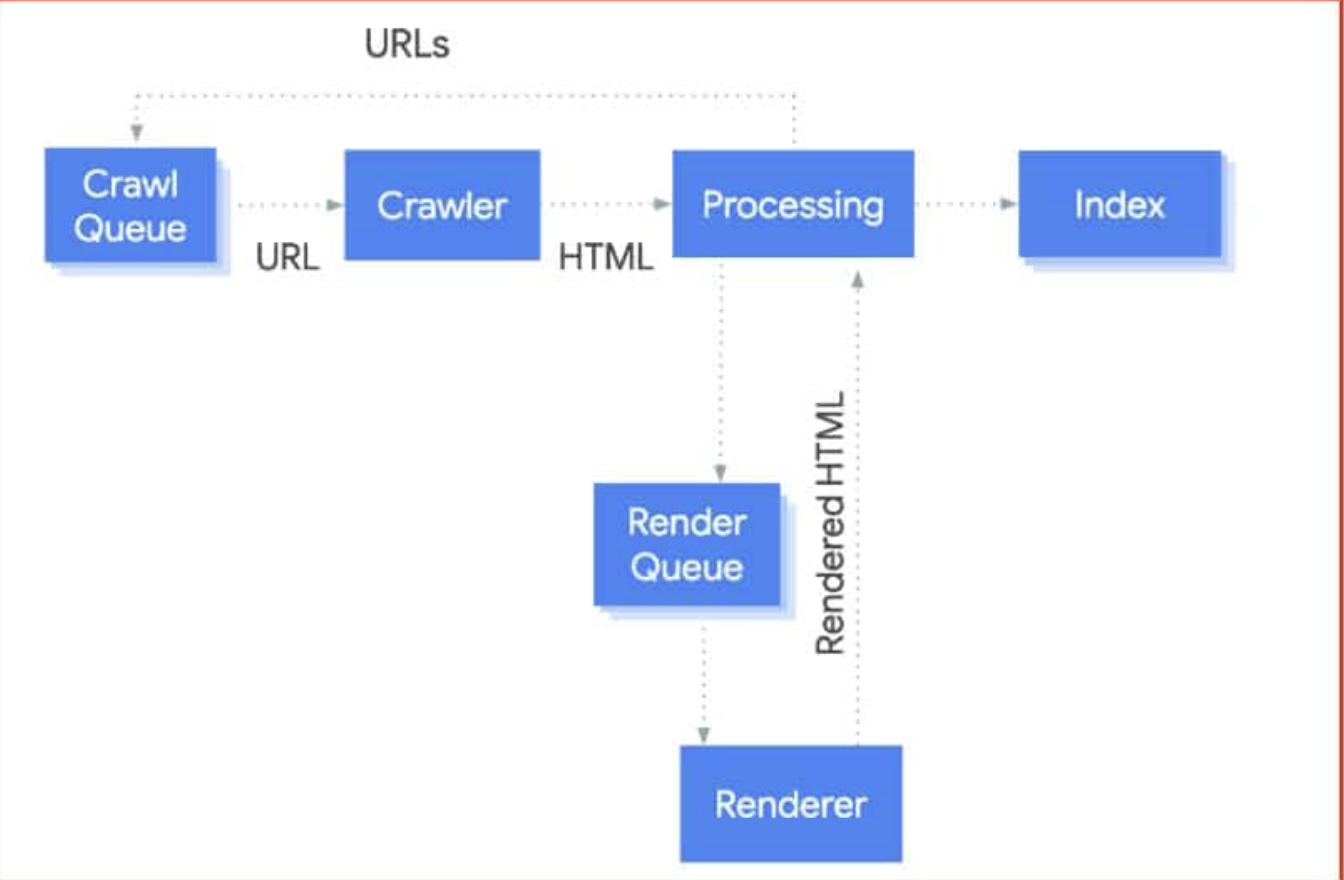

Technical SEO is the setup that helps search engines do their work effectively. That work happens in stages: crawling, rendering, indexing, ranking, and finally sending searchers’ clicks to your pages. It helps to think of it as a pipeline: search engines first crawl your site, then render your content to understand it, and finally add the pages to their index.

Ranking pages and deciding which one earns the click happen only after that. Without sound technical SEO, even the best content or backlinks can go unnoticed.

In fact, 68% of online experiences begin with a search engine, so being crawlable and indexable is the foundation of organic visibility. And since 53% of mobile users abandon sites that take longer than 3 seconds to load, optimizing performance is crucial.

What Matters Most in Technical SEO?

Focusing first on the most critical factors can substantially increase the effectiveness of your website’s SEO:

- Indexability: Search engines must be able to crawl and render your pages so they can understand the content and add it to their index. Without that, your content will not appear in search results.

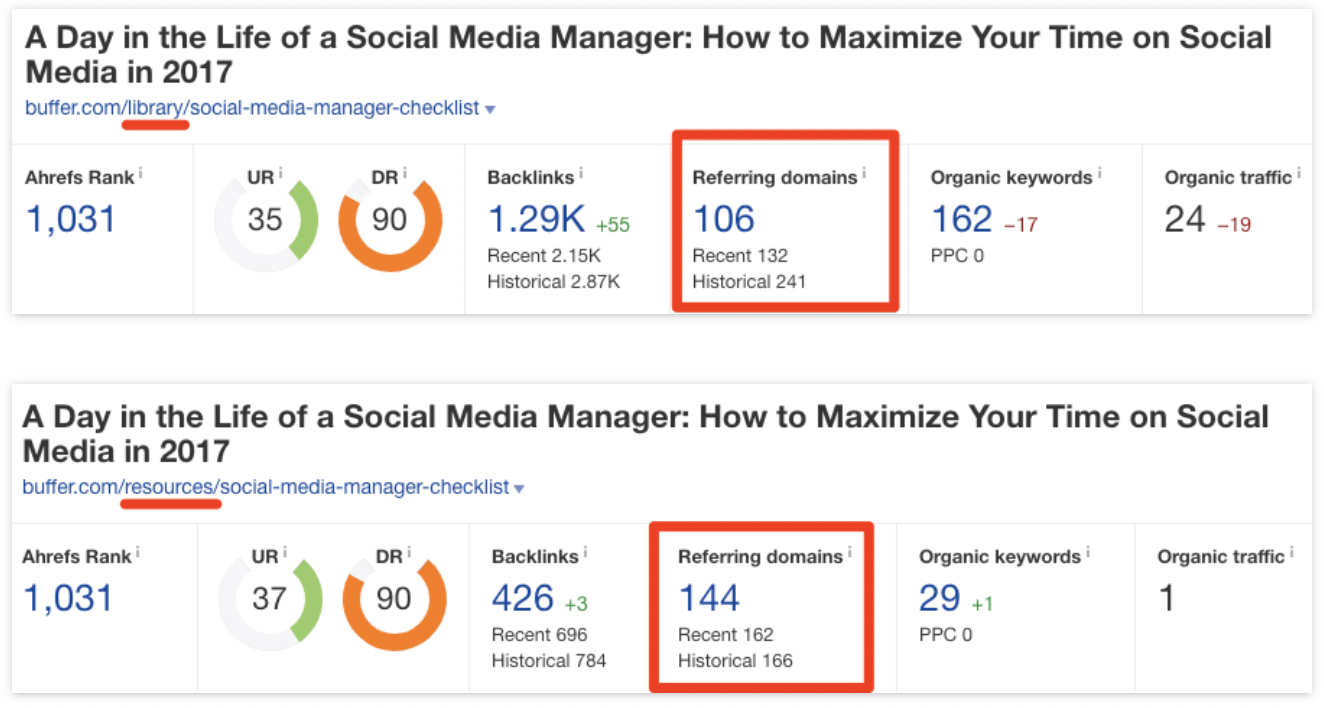

- Links (including reclaimed links): Because links remain a significant ranking factor, reclaiming lost backlinks belongs at the top of your SEO quick-wins list.

- Internal Linking: A sound internal linking structure helps crawlers find new pages and distributes ranking signals evenly across your site.

- Speed (Core Web Vitals & INP): Fast loading and responsive interactions keep visitors coming back, and they are what Google’s page experience signals reward.

- Supporting technical features, such as structured data, sitemaps, and robots.txt, reinforce these priorities and ensure long-term SEO health.

This approach ensures your site is ready not only for traditional search engines but also for an evolving AI search landscape, which increasingly relies on accessible HTML content and efficient crawl paths.

Audit Framework (HubSpot’s 5 Buckets, Expanded)

An efficient technical SEO audit needs a clear structure. HubSpot’s framework groups the work into five areas (Crawlability, Indexability, Renderability, Rankability, and Clickability) that describe how search engines access, understand, and reward a website. Each category helps make your site’s foundation strong enough to support the rest of your SEO work and earn higher rankings in search results.

Crawlability

Crawlability measures how easily search engines can discover and navigate your site. Start by keeping your XML sitemaps accurate, ideally in your root directory, and split them into several files (for example, by content type such as news, images, or video) once your site exceeds the 50,000-URL limit for a single sitemap. A sound architecture with minimal click depth makes pages easier to reach and improves crawl coverage.

Use robots.txt to keep crawlers out of irrelevant sections (for example, staging or admin folders) while leaving primary assets, such as CSS and JavaScript files, crawlable.

Crawl budget management is vital for large websites. Every crawl request consumes resources, so guide Googlebot deliberately:

- Prevent duplicate content from wasting crawl budget by using canonical tags.

- Handle URL parameters on the site itself (canonical tags, consistent internal links, and robots.txt rules); Google retired Search Console’s URL Parameters tool in 2022.

- Remove outdated or low-quality content to give search engines more time to crawl your most valuable content.

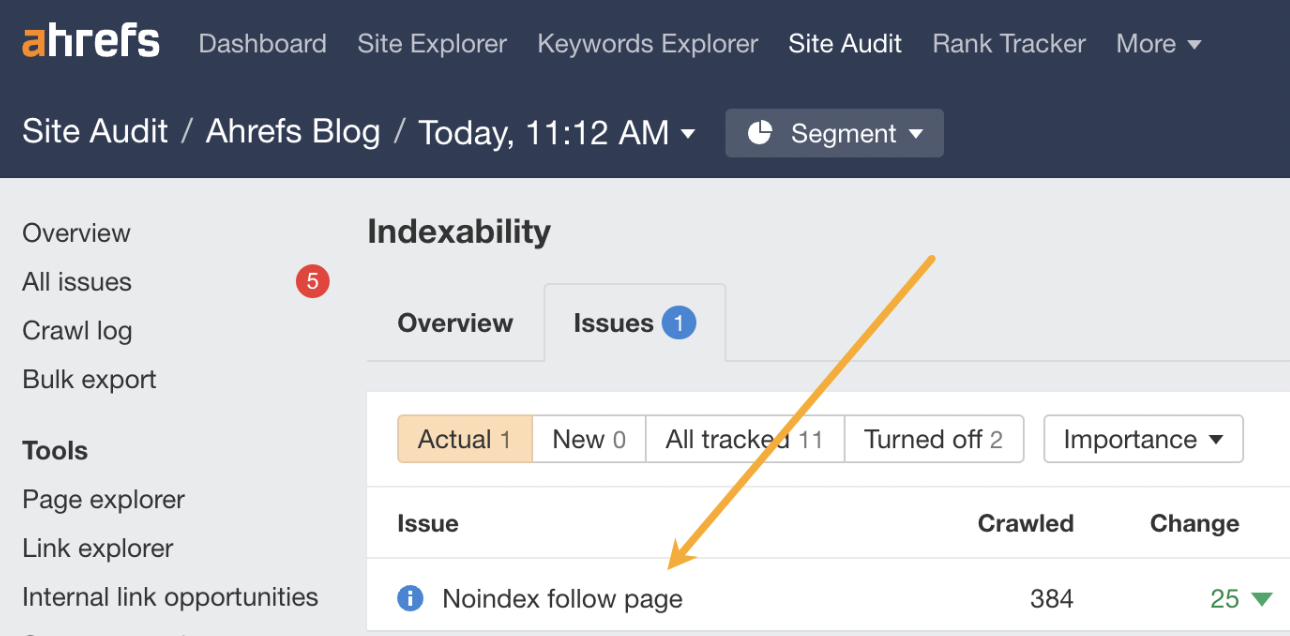

Indexability

A crawlable page is not necessarily indexable. Technical SEO ensures that your website’s most relevant pages are indexed and eligible to rank.

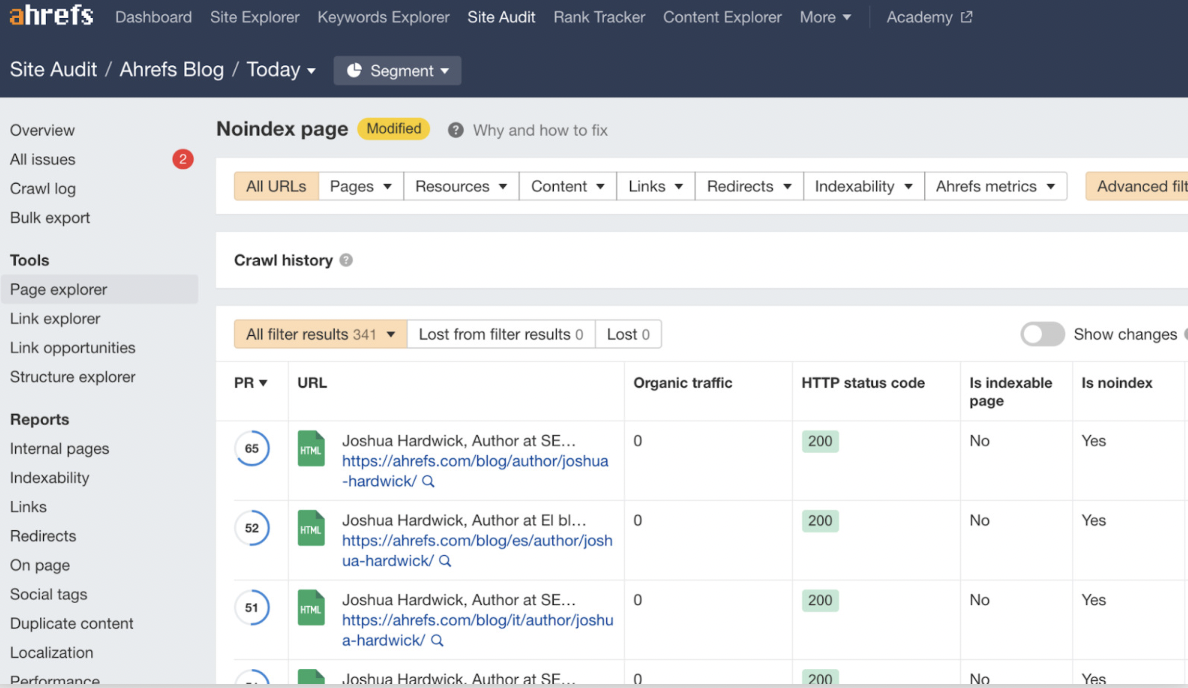

Review your canonicalization plan: your main URLs should carry self-referencing canonical tags, and alternate versions of the same content should point to the preferred URL so the structure is clear. Apply meta robots directives (noindex, nofollow) with great care, because stray noindex tags often come from CMS plugins or template defaults.

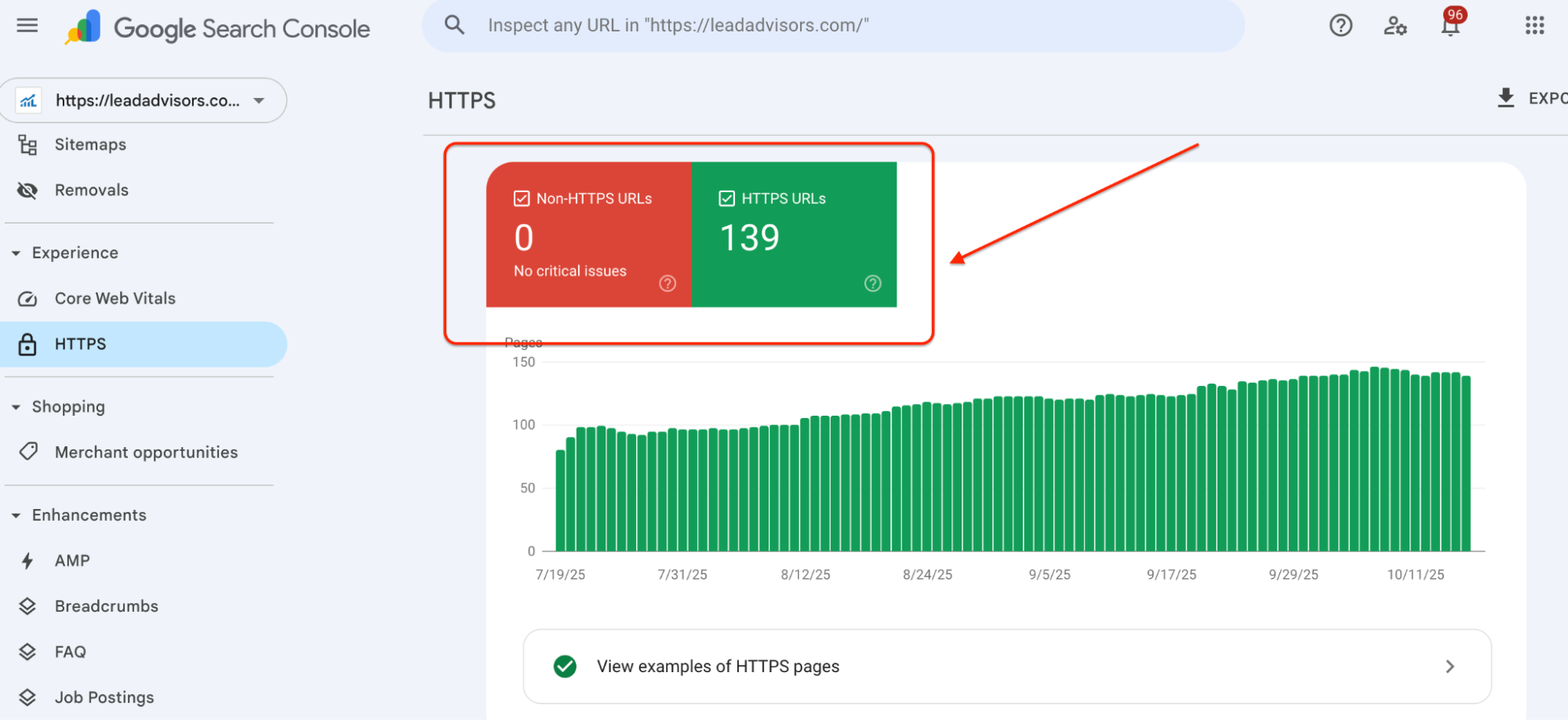

Choose a preferred domain (www vs. non-www) and serve the site over HTTPS for consistency and trust.

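Keep in mind: a page blocked by robots.txt can still be indexed (usually without its content) if other pages link to it. To keep a page out of the index, use a noindex meta tag or X-Robots-Tag header, and leave the page crawlable so search engines can actually see that directive.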
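To audit indexability across many pages, these checks can be scripted. A minimal sketch, assuming you have already fetched each page’s raw HTML and response headers; `IndexSignals` and `indexability_issues` are hypothetical names, and the parser only looks at meta robots, the `X-Robots-Tag` header, and `rel="canonical"`:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class IndexSignals(HTMLParser):
    """Collects meta robots directives and the canonical URL from raw HTML."""

    def __init__(self):
        super().__init__()
        self.robots = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}  # attribute names arrive lowercased
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.robots.update(d.strip().lower() for d in a.get("content", "").split(","))
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")


def indexability_issues(url, html, headers=None):
    """Return reasons why `url` may stay out of the index (an empty list means none found)."""
    parser = IndexSignals()
    parser.feed(html)
    issues = []
    if parser.robots & {"noindex", "none"}:
        issues.append("meta robots noindex")
    # Simplification: header names are matched exactly as given.
    if "noindex" in (headers or {}).get("X-Robots-Tag", "").lower():
        issues.append("X-Robots-Tag noindex")
    if parser.canonical:
        canonical = urljoin(url, parser.canonical)  # resolve relative canonicals
        if canonical.rstrip("/") != url.rstrip("/"):
            issues.append(f"canonicalized to {canonical}")
    return issues
```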

Renderability (Accessibility for Bots)

Renderability describes how completely search engines can load your content and use it to judge the page’s relevance. Optimize server response times, reduce page weight, and make sure critical content is present in the HTML instead of depending on JavaScript rendering, because AI-driven crawlers and lightweight bots may not execute JavaScript.

If you use a library like React, consider server-side rendering (SSR) or prerendering for your most important pages. Also build key navigation paths from plain HTML links that work for both desktop and mobile users and that crawlers can follow without running scripts.
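A quick way to see what a non-rendering crawler gets is to inspect the HTML exactly as served. The sketch below assumes you already have that raw HTML; the class and function names and the example phrases and paths are hypothetical. It ignores `<script>` and `<style>` content, so anything injected client-side counts as missing:

```python
from html.parser import HTMLParser


class RawHTMLAudit(HTMLParser):
    """Collects visible text and <a href> links from HTML as served, without running JavaScript."""

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.links = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href and not href.startswith(("#", "javascript:")):
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_parts.append(data)


def missing_without_js(html, must_have_text, must_have_links):
    """Return (phrases, links) that a crawler which does not execute JavaScript would NOT see."""
    audit = RawHTMLAudit()
    audit.feed(html)
    text = " ".join(" ".join(audit.text_parts).split())  # normalize whitespace
    missing_text = [t for t in must_have_text if t not in text]
    missing_links = [h for h in must_have_links if h not in audit.links]
    return missing_text, missing_links
```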

Don’t let orphaned pages (pages with no internal links pointing to them) become a permanent fixture. A sound internal linking system ensures that no content is left behind.
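Orphans can be found by comparing the URLs you want indexed with the URLs your internal links actually reach. A minimal sketch, assuming a crawl export shaped as a page-to-links dictionary (`find_orphans` and the paths are hypothetical):

```python
def find_orphans(sitemap_urls, internal_links):
    """Pages listed in the sitemap that no other page links to.

    sitemap_urls:   iterable of URLs you want indexed
    internal_links: dict mapping each crawled page -> list of internal URLs it links to
    """
    linked = set()
    for source, targets in internal_links.items():
        linked.update(t for t in targets if t != source)  # self-links don't count
    return sorted(set(sitemap_urls) - linked)
```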

Rankability (Technical Levers)

After ensuring that your site is accessible to crawlers and can be indexed, the next step is to focus on the factors that directly influence rankings. The most potent of these is probably internal linking: doing it right means using descriptive, keyword-rich anchors and linking related pages together into topical clusters. This strengthens topical relevance and also improves the user experience.

Eliminate redirect chains and loops so that link equity is preserved, and use 301 redirects to regain authority from old or broken URLs. Minor technical fixes like these usually pay off much faster than external link-building campaigns.
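Redirect chains and loops are easy to detect from a redirect map (for example, an export of your server or plugin rules). A minimal sketch with hypothetical names and paths; each flagged chain should be flattened so the old URL points straight to its final destination:

```python
def resolve_redirects(redirects, max_hops=10):
    """Analyse a {source: target} redirect map.

    Returns (final_targets, chains, loops):
      final_targets: source -> final destination (loops excluded)
      chains:        sources that need more than one hop (flatten these)
      loops:         sources whose redirects never terminate (or exceed max_hops)
    """
    final_targets, chains, loops = {}, [], []
    for source in redirects:
        seen, current, hops = {source}, redirects[source], 1
        while current in redirects and hops <= max_hops:
            if current in seen:
                break  # revisited a URL: this is a loop
            seen.add(current)
            current = redirects[current]
            hops += 1
        if current in seen or current in redirects:
            loops.append(source)
        else:
            final_targets[source] = current
            if hops > 1:
                chains.append(source)
    return final_targets, chains, loops
```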

Clickability

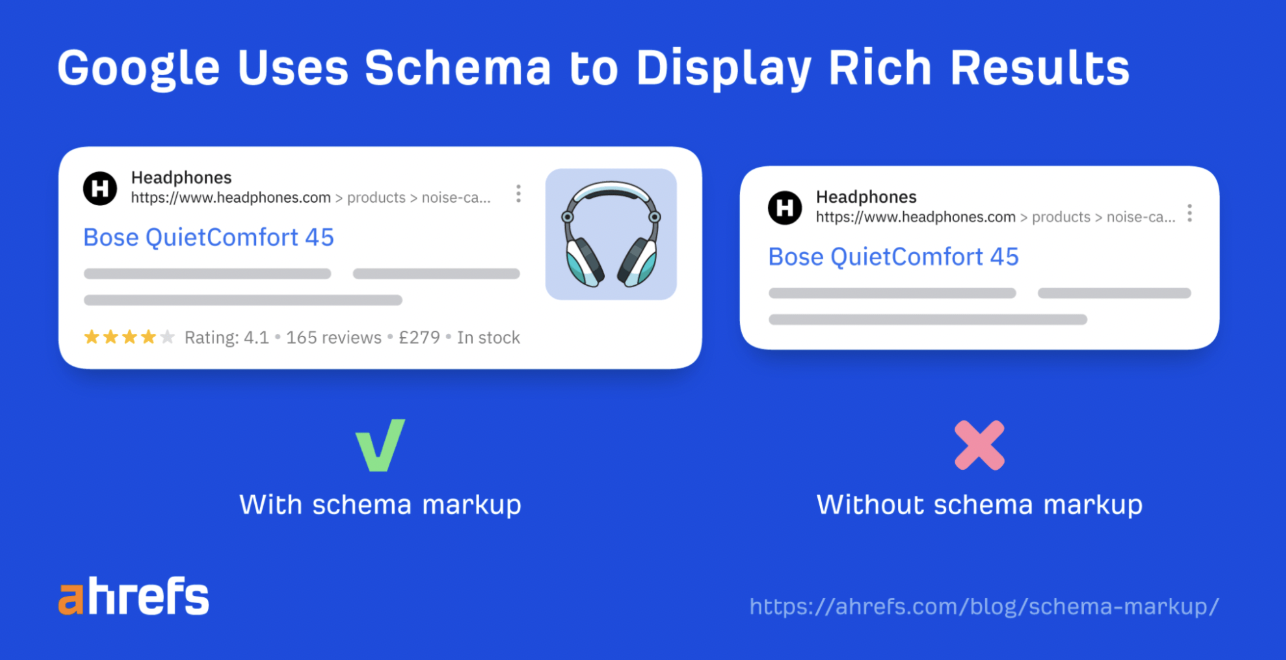

Even if your pages are perfectly indexed, they still need to stand out on the results page. Implement structured data tailored to each page type, such as articles, FAQs, and products, to enhance your SERP appearance.

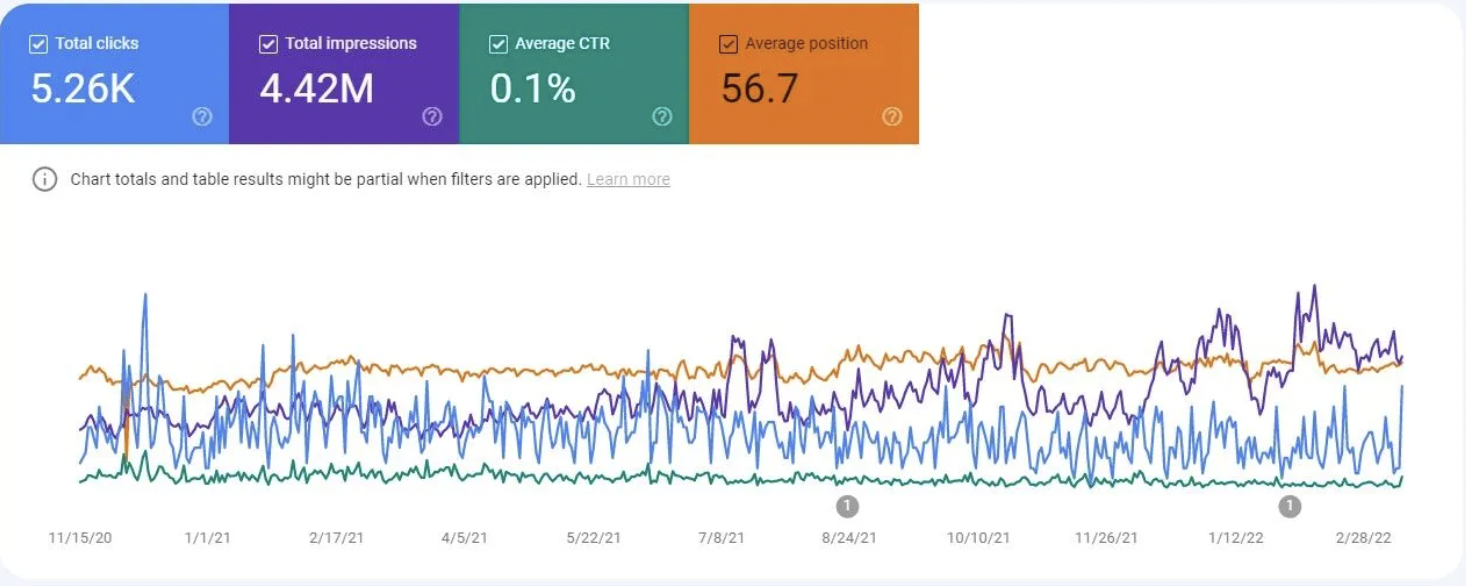

Use Google Search Console to monitor performance and decide which snippets to optimize for more clicks.
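One common way to pick snippets to optimize is to look for pages that earn many impressions but few clicks. A minimal sketch, assuming rows parsed from a Search Console performance export; the function name and the 1,000-impression and 2% CTR cut-offs are arbitrary assumptions to tune for your site:

```python
def ctr_opportunities(rows, min_impressions=1000, max_ctr=0.02):
    """Flag pages that are seen often but rarely clicked.

    rows: iterable of dicts with "page", "clicks", and "impressions" keys.
    Returns (page, impressions, ctr) tuples sorted by impressions, highest first.
    """
    flagged = []
    for row in rows:
        impressions = row["impressions"]
        if impressions < min_impressions:
            continue  # too little data to judge
        ctr = row["clicks"] / impressions
        if ctr <= max_ctr:
            flagged.append((row["page"], impressions, round(ctr, 4)))
    return sorted(flagged, key=lambda item: item[1], reverse=True)
```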

Prepare your content to be chosen as a featured snippet or other SERP feature by providing succinct and precise answers to the most critical questions. The main points to focus on if you want to be found via Google Discover are freshness, visual engagement, and topic depth, which are indicators that enable your content to appear in ever-changing feeds on mobile devices.

Quick Wins (Ahrefs Emphasis)

Not every technical SEO improvement requires a full-scale rebuild of your website. Some changes produce visible ranking gains quite quickly. These “quick wins” focus on crawl efficiency, link equity, and user experience: the fundamentals at the core of technical SEO and indispensable for higher rankings.

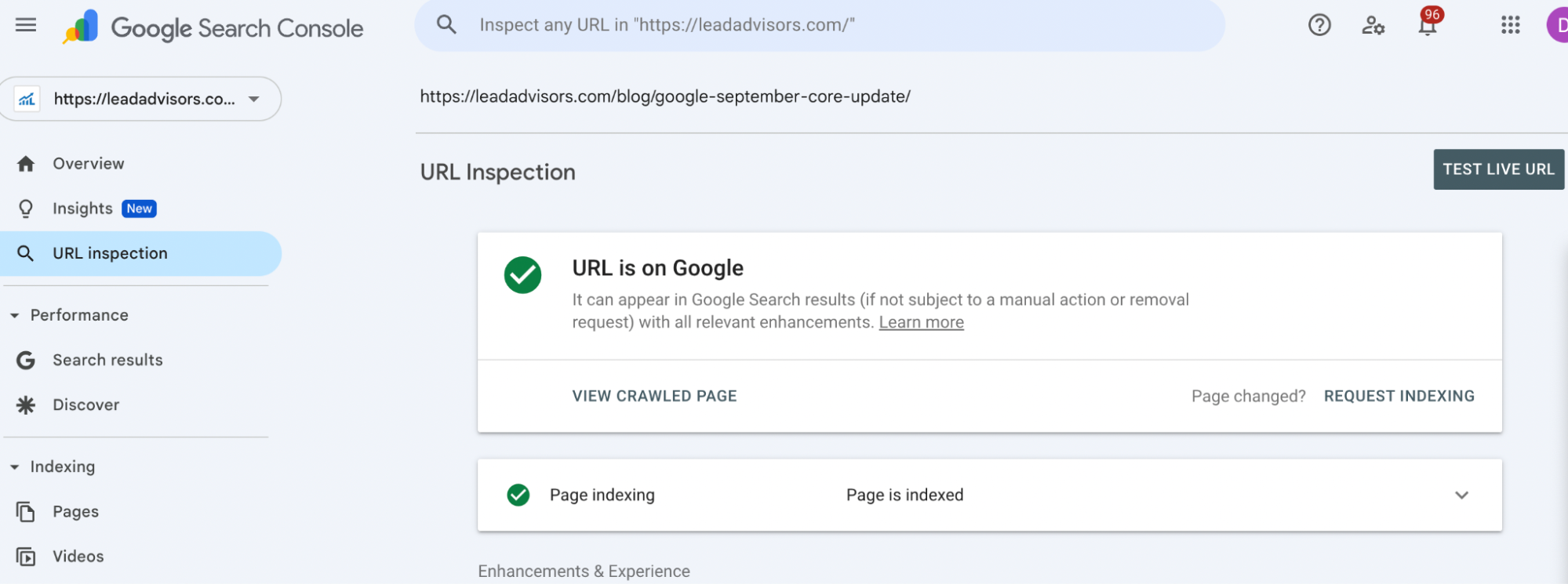

Verify Indexation of Money Pages

One of the first things you must do is check whether your most valuable web pages, e.g., service or product pages, have been indexed correctly.

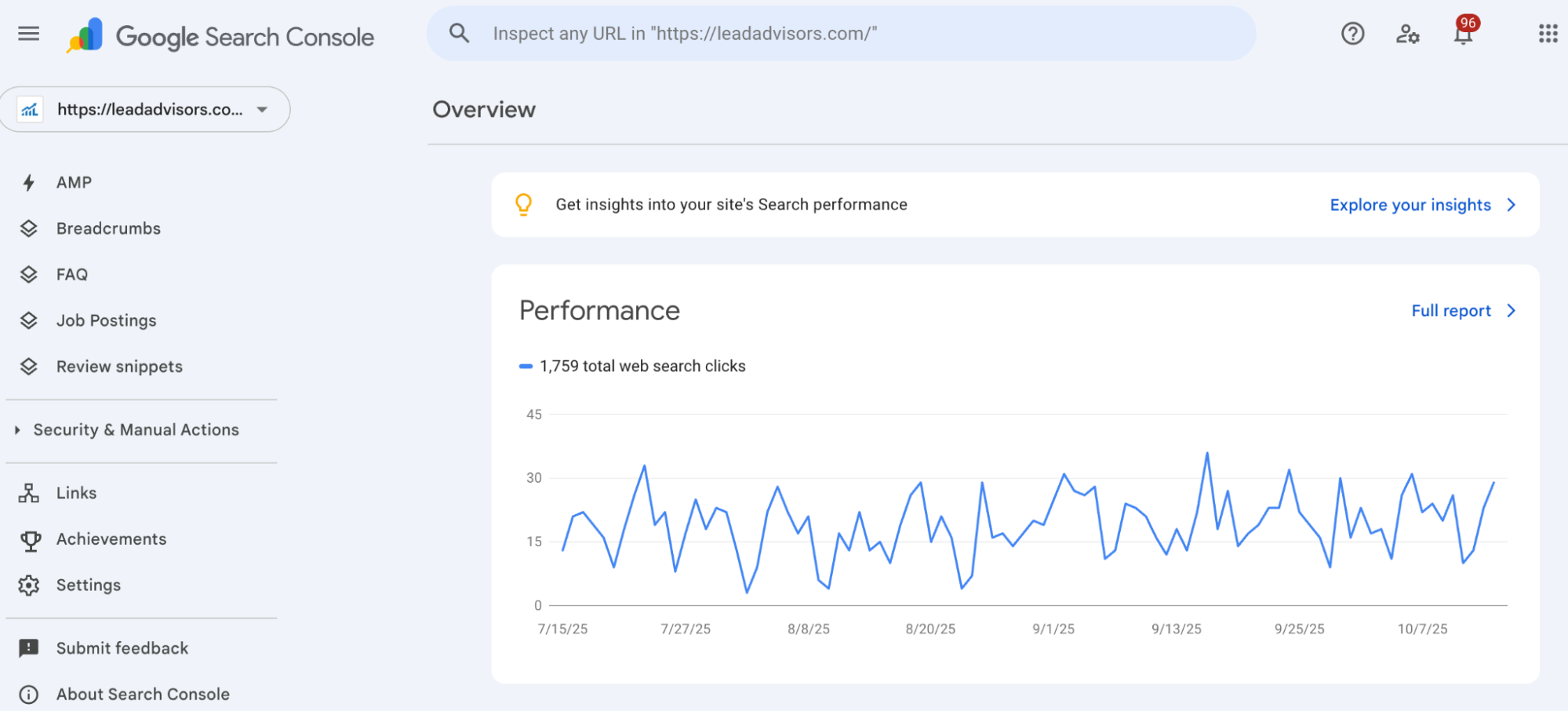

Use Google Search Console’s URL Inspection tool and Ahrefs Site Audit to confirm which pages are live, correctly canonicalized, and indexed.

Additionally, make sure the money pages are included in your XML sitemaps and that the sitemaps contain no duplicate or outdated URLs. This step confirms that your most relevant pages are also visible and accessible to crawlers.
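The sitemap part of this check is simple to automate. A minimal sketch using Python’s standard XML parser, assuming a single `urlset` sitemap; the function names and URLs are hypothetical:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the <loc> values from a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def check_money_pages(xml_text, money_pages):
    """Return (missing, duplicates): money pages absent from the sitemap, and URLs listed twice."""
    seen, duplicates = set(), set()
    for url in sitemap_urls(xml_text):
        if url in seen:
            duplicates.add(url)
        seen.add(url)
    missing = [page for page in money_pages if page not in seen]
    return missing, sorted(duplicates)
```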

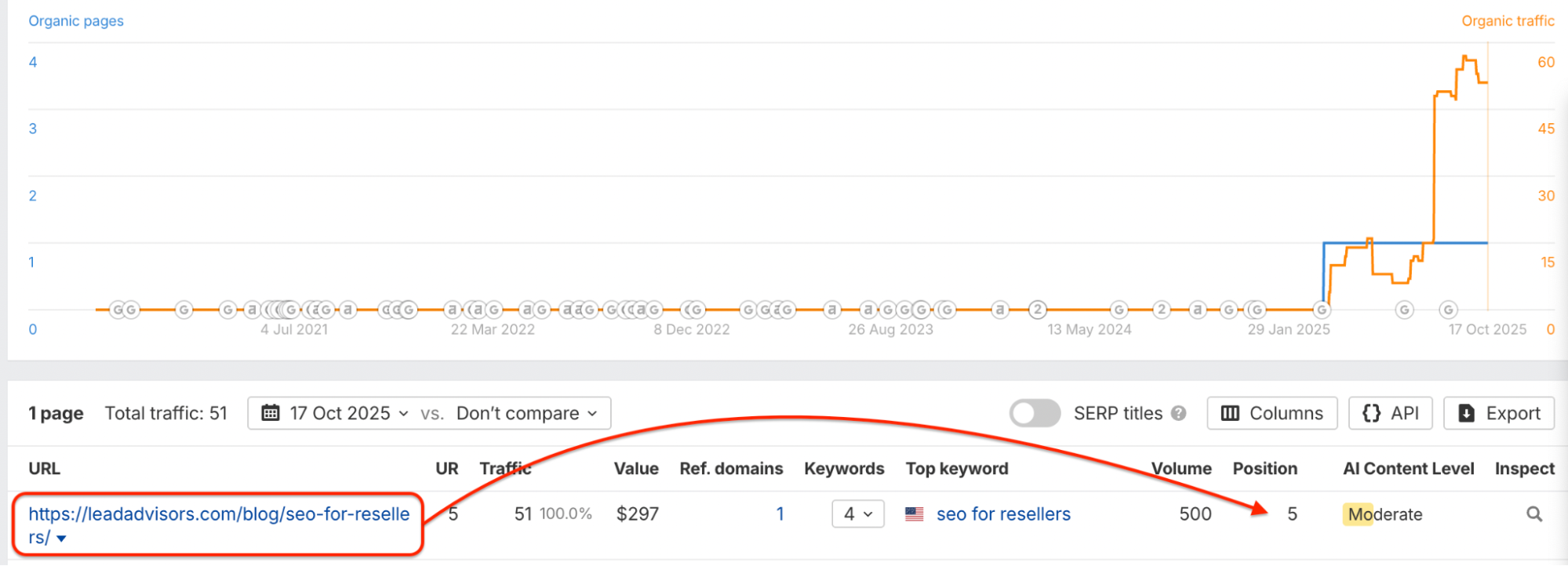

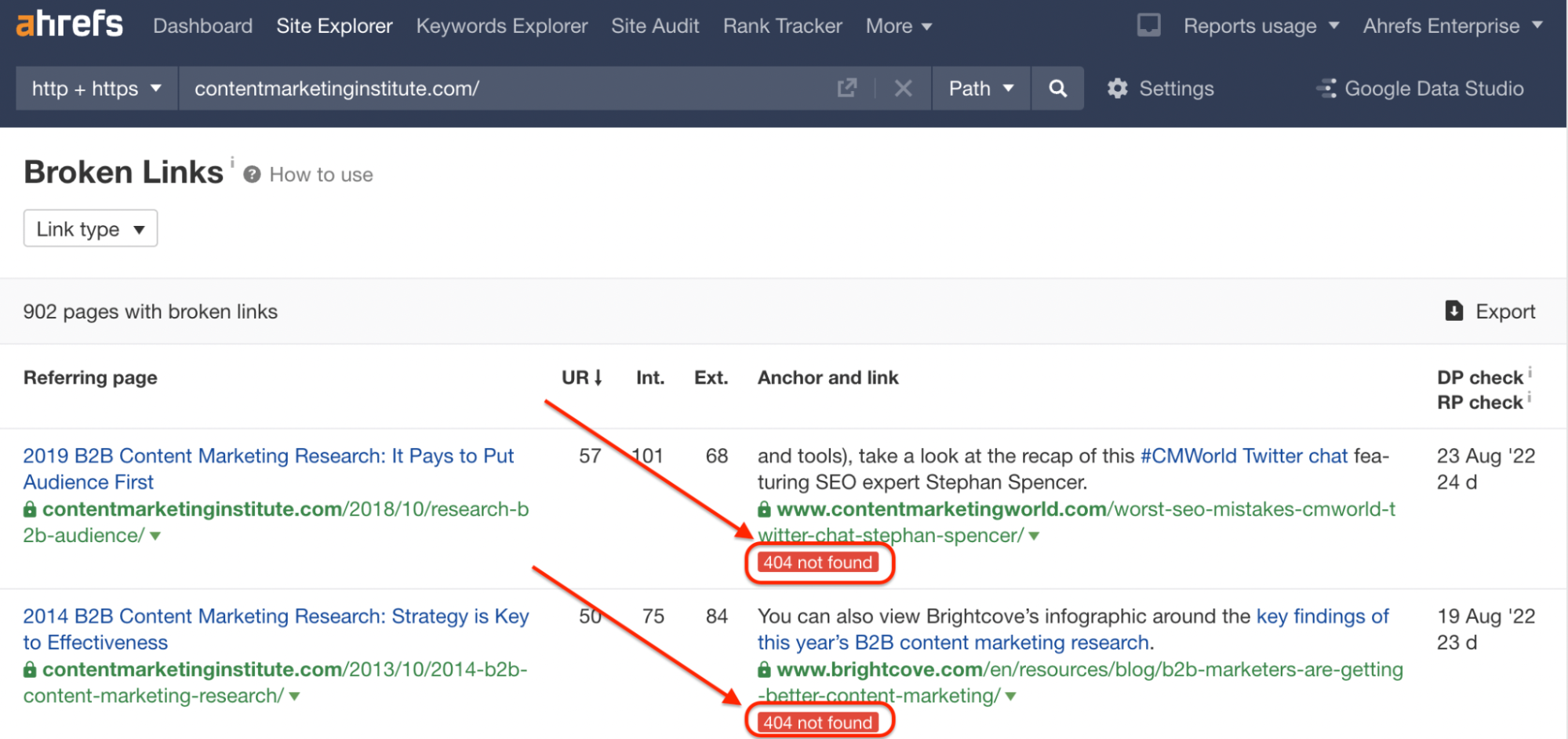

Reclaim Lost Links

Use Ahrefs’ “Best by Links” and “Broken Backlinks” reports to find 404 pages that still have referring domains (RDs), then 301-redirect those dead URLs to their closest live counterparts.

| Issue | Quick Fix |
| --- | --- |
| 404 with backlinks | 301 redirect to updated or closest URL |
| Removed or outdated URL | Restore old content or redirect to the related category page |

This single action can quickly restore lost authority and recover link equity.
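For large lists of 404s, a rough first pass at “closest URL” can be scripted before a human reviews it. This sketch swaps in plain string similarity (`difflib`) as a stand-in for content similarity, which is an assumption and not how Ahrefs matches pages; the function name and paths are hypothetical:

```python
import difflib


def suggest_redirects(broken_paths, live_paths, cutoff=0.6):
    """Suggest a 301 target for each broken path that still has backlinks.

    String similarity is only a rough proxy for "closest content";
    review every suggestion manually before deploying it.
    Returns {broken_path: live_path or None}.
    """
    suggestions = {}
    for path in broken_paths:
        match = difflib.get_close_matches(path, live_paths, n=1, cutoff=cutoff)
        suggestions[path] = match[0] if match else None
    return suggestions
```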

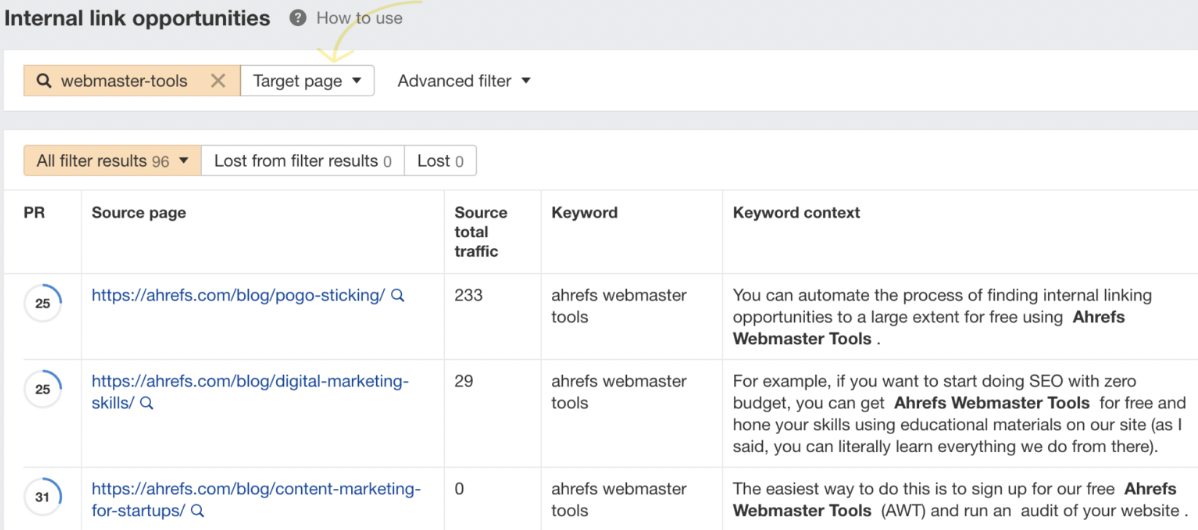

Add High-Value Internal Links

The best way to identify linking opportunities between top-performing and weaker content is to conduct a sitewide crawl using Ahrefs or Screaming Frog.

Add internal links from your authoritative articles to relevant pages, using natural anchor text.

These connections deepen topical clusters, facilitate a more efficient distribution of PageRank, and direct users to the primary conversion areas, thereby increasing the brand’s visibility in search engine results.
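To see how internal links spread PageRank-like authority, a simplified PageRank can be run over your internal link graph. This is a textbook power-iteration sketch (damping 0.85), not Google’s actual ranking computation; the graph and function name are hypothetical:

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank over an internal link graph.

    links: dict mapping each page -> list of pages it links to (internal only).
    Pages with no outlinks spread their score evenly across all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = set(links.get(page, []))
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:  # dangling page: distribute its score evenly
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank
```

Pages that receive few internal links end up with low scores, which makes them candidates for new links from your strongest articles.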

Fix Top Broken Pages & Links

Use your auditing tools to find broken links (4xx/5xx responses) and fix them at the source.

Fixing them smooths crawl flow, protects the user experience, and trims unnecessary requests that can slow pages down. When updating internal links, always point them to the most recent relevant live URL rather than simply removing the broken reference.

One-Click Wins

Several fixes deliver instant impact with minimal effort:

- Redirect all HTTP traffic to HTTPS

- Consolidate duplicate domain versions (www vs. non-www) into a single preferred version.

- Remove staging URLs and retire variants that are no longer in use.

- Publish updated XML sitemaps so search engines learn about the latest changes.

- Confirm that your structured data meets Google’s requirements with the Rich Results Test so the page is eligible for rich results.

These quick fixes alone go a long way toward keeping a website technically consistent and its off-page SEO signals consolidated.

Site Architecture & Navigation

A well-planned site architecture is the core of technical SEO. It lets users and crawlers navigate your site and passes link equity from one page to another. A well-structured site improves crawlability, user experience, and search engine rankings.

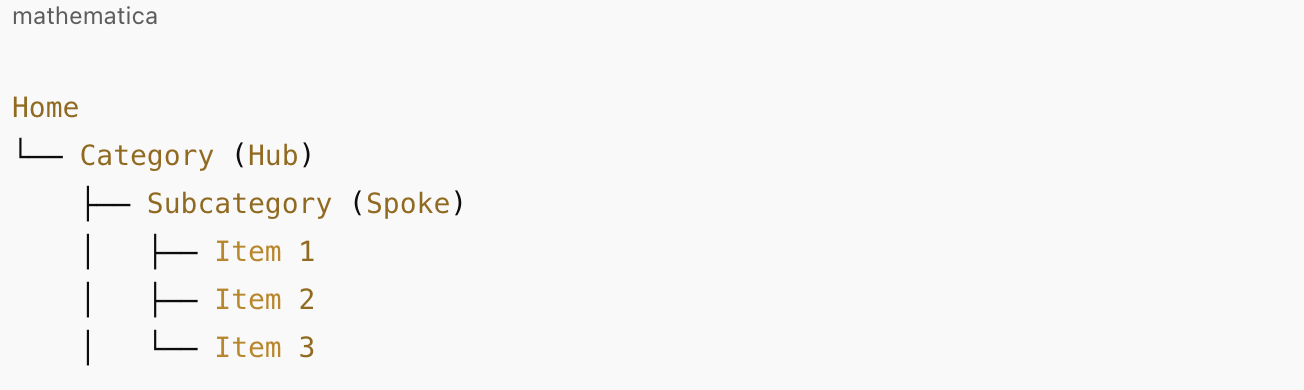

Hub-and-Spoke Structure

Think of your website as a hub-and-spoke network: hubs are categories, and spokes are subcategories or individual items.

This model organizes content so that both users and search engines can easily understand each page’s context and how pages relate to one another.

Structure guidelines:

- Make sure every page can be reached within three clicks of the homepage.

- Each hub should link to its subpages and related articles.

- Use keyword-relevant anchor text on these links for SEO clarity and navigation flow.
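The three-click guideline can be checked with a breadth-first search from the homepage over your internal link graph. A minimal sketch, assuming a page-to-links dictionary from a crawl; the function names and paths are hypothetical:

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search from the homepage; returns {page: clicks from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest click path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, all_pages, max_clicks=3, start="/"):
    """Pages deeper than max_clicks, plus pages unreachable from start (reported as None)."""
    depths = click_depths(links, start)
    return {p: depths.get(p) for p in all_pages
            if depths.get(p) is None or depths[p] > max_clicks}
```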

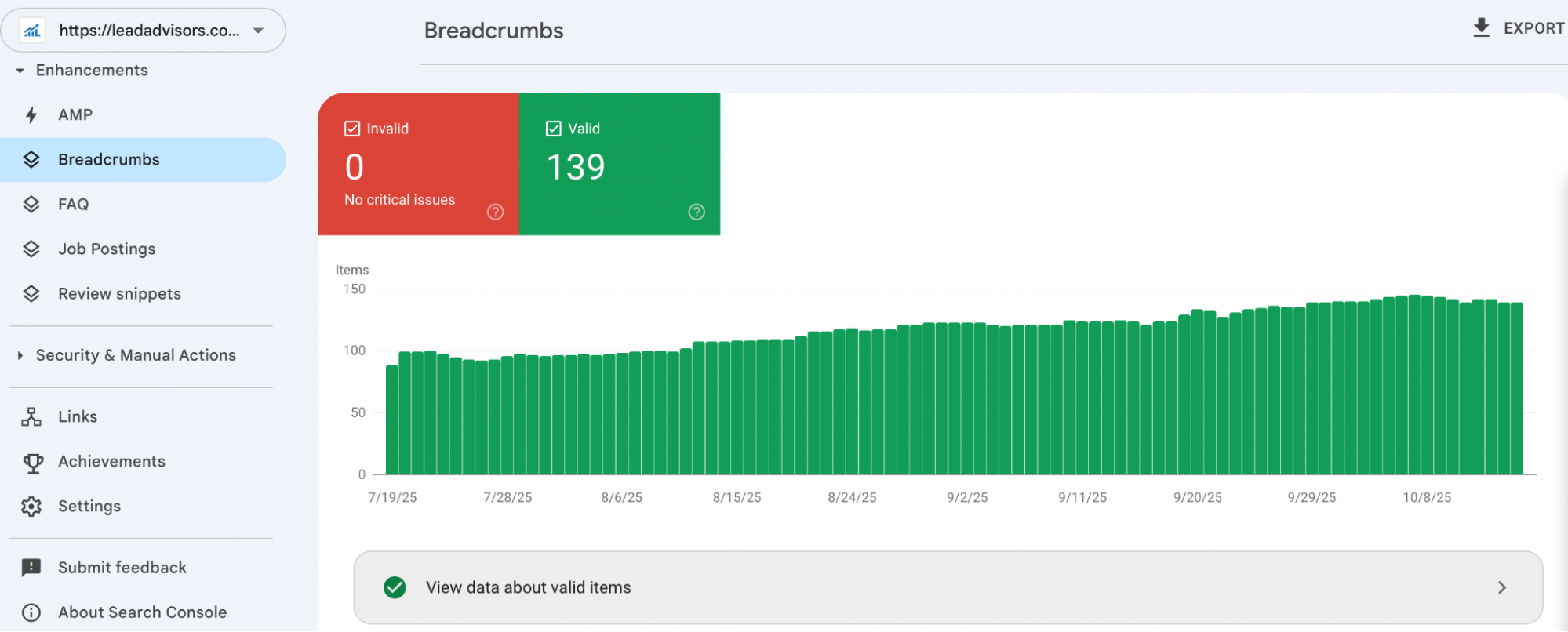

Breadcrumbs (UX + Schema)

Breadcrumbs help both users and search engines. They give visitors a navigational aid through your content hierarchy and give search engines structural hints about how pages relate.

Add breadcrumb schema markup so search engines can show the page’s place in your hierarchy directly in the search results.

Best practices:

- Put breadcrumbs close to the top of the page.

- Align breadcrumb paths with your site hierarchy.

- Use structured data (BreadcrumbList) to improve visibility in rich results.
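A minimal sketch of generating `BreadcrumbList` JSON-LD from a breadcrumb trail; the function name and URLs are hypothetical, while the `@type`, `itemListElement`, `ListItem`, `position`, `name`, and `item` properties come from schema.org:

```python
import json


def breadcrumb_jsonld(trail):
    """Build BreadcrumbList JSON-LD from an ordered list of (name, url) pairs, homepage first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


trail = [("Home", "https://example.com/"),
         ("Shoes", "https://example.com/shoes/"),
         ("Running", "https://example.com/shoes/running/")]
print('<script type="application/ld+json">'
      + json.dumps(breadcrumb_jsonld(trail), indent=2)
      + "</script>")
```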

Pagination Best Practices

If you have lengthy lists or grids of products, use pagination rather than relying on infinite scroll alone. That way every page stays easily reachable for crawlers and for users navigating the site, and link equity is distributed properly.

Note:

rel="prev" and rel="next" are no longer indexing signals in Google, but you can still use them for accessibility and discovery.

Tips:

- Ensure that each paginated page is accessible to crawlers and has unique content (do not use identical titles/meta for different pages).

- Provide links to “Page 1” and “Next” so that navigation is easy.

- Give each paginated page a self-referencing canonical; don’t canonicalize the whole sequence to page 1.

Faceted Navigation & Parameters

Faceted navigation (filters, sorting, or tags) is a great user experience feature, but if it is not handled well it can throw your site’s crawling into disorder. Poorly configured parameters lead to duplicate content, wasted crawl budget, and weak ranking signals.

Parameter Management Checklist:

- Block low-value or infinite filter combinations in robots.txt.

- Add a noindex meta tag to thin or redundant facet pages (and don’t also block them in robots.txt, or crawlers can’t see the tag).

- Implement canonical tags that point to the main version.

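- Keep parameter names and order consistent in internal links; Search Console’s URL Parameters tool was retired in 2022, so parameter handling now happens on-site.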

- Link internally only to filtered versions that have real search value.

The main point: keep valuable pages easily accessible without overwhelming crawlers with endless parameter variations.
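One way to make the checklist enforceable is to encode the policy for each filtered URL. A minimal sketch; the allowlist, the one-facet limit, and the three outcomes (`index`, `noindex` with a canonical to the base listing, `block` via robots.txt) are hypothetical choices for illustration:

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical policy: facets worth indexing vs. ones that only re-sort or narrow thinly.
INDEXABLE_FACETS = {"color", "brand"}
MAX_INDEXABLE_FACETS = 1  # combinations beyond this are treated as low value


def facet_policy(url):
    """Classify a filtered URL as "index", "noindex" (canonical to base listing), or "block"."""
    params = [key for key, _ in parse_qsl(urlsplit(url).query)]
    if not params:
        return "index"  # the unfiltered listing itself
    if any(p not in INDEXABLE_FACETS for p in params):
        return "block"  # e.g. sort orders or price ranges: keep crawlers out
    if len(params) > MAX_INDEXABLE_FACETS:
        return "noindex"  # valid but thin combination
    return "index"
```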

Sitemaps & Robots Control

XML sitemaps and the robots.txt file are the nerve center of your technical SEO framework. They work hand in hand: sitemaps point search engines to the URLs you want discovered, while robots.txt tells crawlers which areas of your site they may or may not access.

XML Sitemaps

A clean, accurate XML sitemap helps search engines discover new URLs efficiently. Be deliberate about what you include: list only indexable, canonical pages that reflect your live site structure.

Best practices:

- Always keep lastmod values accurate to show recent changes.

- Don’t rely on <priority> or <changefreq> values; Google ignores them.

- Split large sitemaps by content type (e.g., /blog/, /products/, videos), and split any file that exceeds 50,000 URLs or 50 MB uncompressed.

- Use a sitemap index file to list the locations of all child sitemaps.
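A minimal sketch of splitting URLs into child sitemaps plus a sitemap index; the 50,000-URL limit and the namespace come from the sitemaps protocol, while the file names, function name, and input format are hypothetical (the 50 MB size limit is not checked here):

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # protocol limit per file (50 MB uncompressed also applies)
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemaps(entries, base_url, max_urls=MAX_URLS_PER_SITEMAP):
    """Split (loc, lastmod) entries into child sitemaps plus one sitemap index.

    Returns {filename: xml_text}. lastmod values should use W3C date format.
    """
    files, children = {}, []
    for start in range(0, len(entries), max_urls):
        name = f"sitemap-{start // max_urls + 1}.xml"
        urls = "".join(
            f"<url><loc>{escape(loc)}</loc><lastmod>{lastmod}</lastmod></url>"
            for loc, lastmod in entries[start:start + max_urls]
        )
        files[name] = f'<?xml version="1.0" encoding="UTF-8"?><urlset xmlns="{NS}">{urls}</urlset>'
        children.append(name)
    index = "".join(
        f"<sitemap><loc>{escape(base_url.rstrip('/') + '/' + child)}</loc></sitemap>"
        for child in children
    )
    files["sitemap-index.xml"] = (
        f'<?xml version="1.0" encoding="UTF-8"?><sitemapindex xmlns="{NS}">{index}</sitemapindex>'
    )
    return files
```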

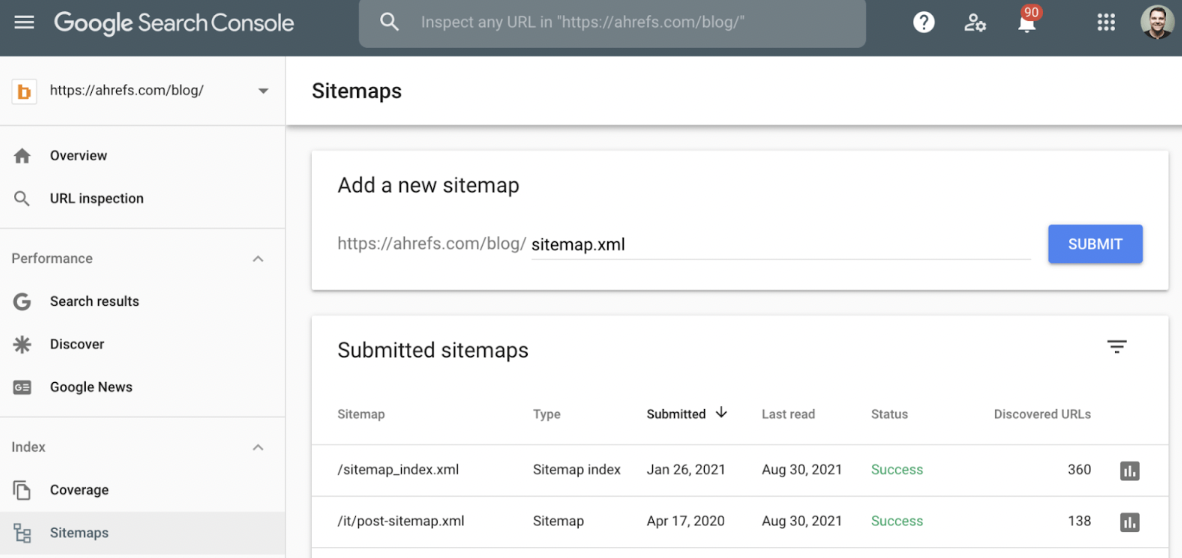

Submitting & Monitoring in Google Search Console

Submit all active sitemaps to Google Search Console (GSC), and keep checking for:

- Indexing errors or pages that are not indexed

- Unexpected 404s or redirected URLs

- Coverage problems due to canonical mismatches

Update your sitemaps whenever content or URLs change, especially after a major site migration.

Robots.txt

The robots.txt file tells crawlers which parts of your site are off-limits. Used correctly, it controls crawling without hurting visibility.

Do:

- Disallow irrelevant paths, such as the /admin/, /cart/, and /checkout/ directories or internal search results.

- Keep the assets needed for a full render (CSS, JS, images) open to all crawlers.

Don’t:

- Block directories or files that are needed to load and display pages.

- Use robots.txt to keep pages out of search results; noindex is the correct tool for that.
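Before deploying robots.txt changes, it helps to test them against URLs you know must stay crawlable or blocked. A minimal sketch using Python’s `urllib.robotparser` with hypothetical rules and URLs; note that this parser follows the original robots.txt rules (first matching line wins, no wildcards) rather than Google’s longest-match behavior, so treat it as a sanity check:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; there is no Disallow for /assets/, so CSS and JS stay crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /search
"""


def check_rules(robots_txt, must_allow, must_block, agent="Googlebot"):
    """Return (wrongly_blocked, wrongly_allowed) URLs for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    wrongly_blocked = [u for u in must_allow if not parser.can_fetch(agent, u)]
    wrongly_allowed = [u for u in must_block if parser.can_fetch(agent, u)]
    return wrongly_blocked, wrongly_allowed
```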

Blocking or Allowing AI Crawlers

As the number of AI-driven crawlers grows, it is better to decide your policy early. Some companies allow AI bots (such as those behind ChatGPT or Perplexity) to gain visibility and wider content distribution, while others block them to safeguard proprietary data.

Trade-off overview:

| Option | Benefit | Risk |
| --- | --- | --- |
| Allow AI crawlers | Increases content exposure and citation potential | May lead to content reuse or data scraping |
| Block AI crawlers | Preserves data control and originality | Reduces reach and brand mentions |

Choose according to your content objectives, and state your policy explicitly in robots.txt using User-agent directives.
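A minimal sketch of writing an explicit AI-crawler policy into robots.txt. The user-agent tokens listed are common examples (OpenAI’s GPTBot, PerplexityBot, Anthropic’s ClaudeBot, Google-Extended, Common Crawl’s CCBot); confirm current names in each vendor’s documentation, and remember that robots.txt is a request, not enforcement. The function name and the sample policy are hypothetical:

```python
# Example AI crawler user-agent tokens; confirm current names with each vendor.
AI_AGENTS = ["GPTBot", "PerplexityBot", "ClaudeBot", "Google-Extended", "CCBot"]


def ai_policy_block(policy):
    """Render robots.txt groups for AI crawlers.

    policy: dict mapping user-agent token -> "allow" or "block".
    """
    groups = []
    for agent, decision in policy.items():
        rule = "Allow: /" if decision == "allow" else "Disallow: /"
        groups.append(f"User-agent: {agent}\n{rule}\n")
    return "\n".join(groups)


policy = {agent: "block" for agent in AI_AGENTS}
policy["PerplexityBot"] = "allow"  # e.g. keep citation-driven visibility
print(ai_policy_block(policy))
```

Crawlers not named in these groups fall back to your existing `User-agent: *` rules.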

Together, the XML sitemap and robots.txt file let a website strike a balance between openness and control. Keeping sitemaps up to date, monitoring your site in Google Search Console, and defining unambiguous crawl directives keep your site optimized, protected, and discoverable, which are the main long-term benefits of technical SEO.

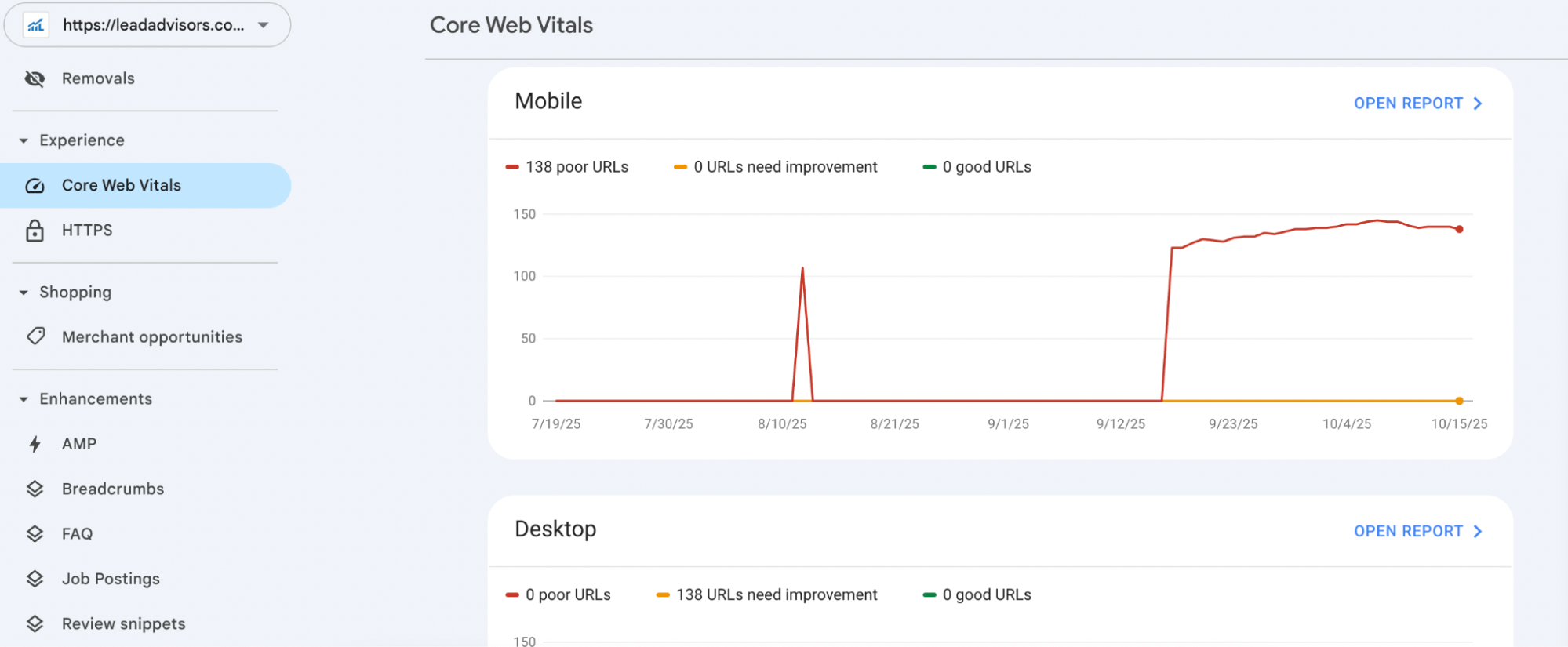

Core Web Vitals & Page Experience (AIOSEO + Semrush, Updated)

A fast, stable, and responsive site isn’t just a ranking factor—it’s a trust factor. Modern technical SEO practices emphasize real-world performance metrics that directly influence how users and search engine algorithms perceive your pages.

Optimizing page experience is one of the main ways technical SEO helps you maintain visibility and engagement.

Core Web Vitals in 2025

Google’s latest Core Web Vitals benchmarks remain the backbone of page experience scoring. These metrics now include INP (Interaction to Next Paint), replacing FID as the standard for measuring interactivity.

| Metric | Good threshold | What it measures |
| --- | --- | --- |
| LCP (Largest Contentful Paint) | ≤ 2.5 s | Loading performance |
| CLS (Cumulative Layout Shift) | ≤ 0.1 | Visual stability |
| INP (Interaction to Next Paint) | ≤ 200 ms | Responsiveness |

Always use field data when available, as it reflects actual user experiences rather than synthetic tests.
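Google assesses each metric at the 75th percentile of page loads, with “poor” starting above 4 s for LCP, 500 ms for INP, and 0.25 for CLS. A minimal sketch that classifies field samples against those thresholds; the function names are hypothetical, and the nearest-rank percentile may differ slightly from how CrUX computes it:

```python
import math

# (good_max, poor_min) per metric: "good" if <= good_max, "poor" if > poor_min.
THRESHOLDS = {"LCP": (2.5, 4.0), "INP": (200, 500), "CLS": (0.1, 0.25)}  # seconds, ms, unitless


def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def assess(metric, samples):
    """Return (p75 value, rating) for one Core Web Vitals metric."""
    value = p75(samples)
    good_max, poor_min = THRESHOLDS[metric]
    if value <= good_max:
        return value, "good"
    if value <= poor_min:
        return value, "needs improvement"
    return value, "poor"
```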

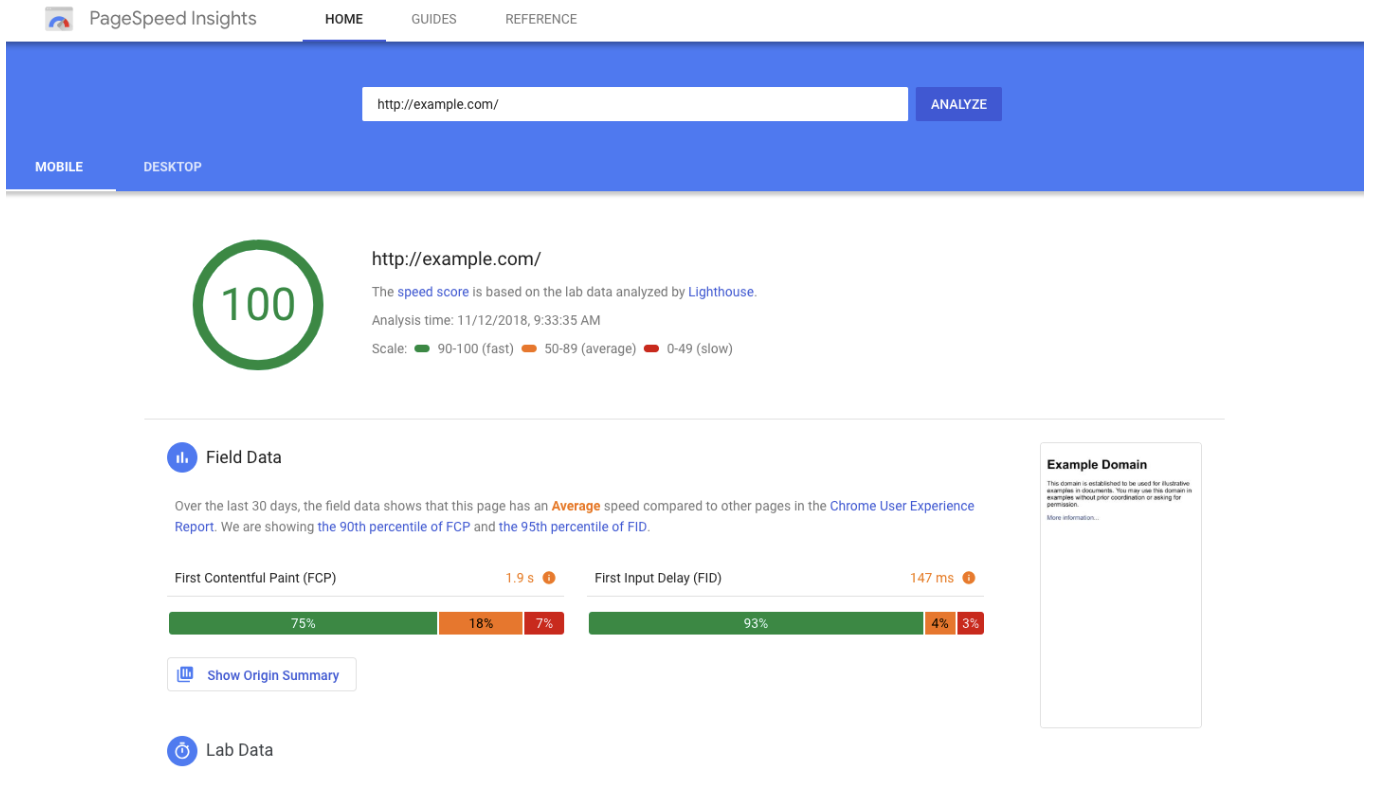

PageSpeed Optimization Actions

Improving these scores requires focused, technical adjustments. Tools like AIOSEO and Semrush Site Audit can highlight bottlenecks and opportunities.

Essential optimizations:

- Compress and serve images in next-gen formats (WebP/AVIF).

- Implement lazy loading for below-the-fold media.

- Minify and defer CSS/JS to reduce render-blocking.

- Inline critical CSS for faster first paint.

- Use a CDN to serve static assets closer to users.

- Set strong cache-control headers for repeat visits.

These optimizations directly affect both user satisfaction and ranking potential.

Mobile Friendliness & Interstitials Policy

Page experience extends beyond speed. Ensure your layout is mobile-responsive, touch-friendly, and easy to read across all devices.

Avoid intrusive interstitials (pop-ups, overlays) that obscure content or disrupt navigation, as they can prevent search engines from fully evaluating your content and frustrate users.

Also, verify that mobile resources—images, fonts, and scripts—aren’t blocked in your robots.txt file. Proper access enables search engines to render the page accurately during mobile-first indexing.

Monitoring & Continuous Tracking

Use multiple tools for accurate diagnostics and ongoing performance tracking:

- Google Search Console (CWV report): Monitors field data from real Chrome users.

- PageSpeed Insights: Compares lab and field results to identify specific layout or render issues.

- AIOSEO + Semrush dashboards: Offer cumulative health scoring and issue prioritization.

Regular reviews ensure that performance gains persist after content updates or design changes and catch regressions early.

HTTPS, Security & Versions

Security and consistency are non-negotiable pillars of technical SEO. HTTPS is more than just encryption—it’s a ranking signal, a trust factor, and a technical safeguard that strengthens how search engines interpret and prioritize your site.

SSL/TLS: Why It Still Matters

Migrating to HTTPS protects user data through SSL/TLS encryption and signals authenticity to both users and search engine algorithms.

It also prevents tampering or injection of malicious content by third parties—maintaining integrity across every web page.

Benefits of HTTPS:

- Slight but confirmed ranking boost

- Increased user confidence and conversion rate

- Secured data transfer between browser and server

- Required for modern browser features (e.g., geolocation, payments, push notifications)

Migration Checklist

A poorly planned HTTPS migration can result in duplicate indexing, duplicate content, or loss of link equity. Use a structured process to ensure a smooth transition:

HTTPS Migration Checklist

- Map all old HTTP URLs to their HTTPS counterparts (301 redirects).

- Update all canonical tags to reference HTTPS.

- Adjust hreflang attributes to point to secure versions.

- Regenerate and resubmit XML sitemaps with HTTPS URLs.

- Review and update your robots.txt file to disallow paths as needed.

- Reverify site ownership in Google Search Console and Bing Webmaster Tools.

- Monitor redirects and coverage reports to catch indexing errors.

Keeping redirects concise and consistent across all URL variations helps maintain link equity and prevent crawl waste.

Single Canonical Hostname & Protocol Enforcement

To avoid duplication and index fragmentation, enforce a single canonical hostname and protocol version (either https://www.example.com or https://example.com, but not both).

Set permanent (301) redirects from all alternate forms (HTTP, non-www, or trailing-slash variants) to your primary version. Then use the URL Inspection tool in GSC to verify that Google recognizes only your canonical target.

A consistent host and protocol not only eliminates confusion but also consolidates signals for stronger authority and improved search engine rankings.
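The redirect rule can be expressed as one function that maps any variant to the canonical form. A minimal sketch, assuming `www.example.com` is the chosen host and that directory-style paths end in a slash (both hypothetical conventions); in production the same logic would live in your web server or CDN configuration:

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.example.com"  # hypothetical: pick www or non-www, not both


def redirect_target(url):
    """Return the 301 target for a non-canonical variant, or None if `url` is already canonical.

    Normalizes protocol (https), hostname (CANONICAL_HOST), and the trailing slash on
    directory-style paths; keeps the query string and drops any fragment.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"  # assumed convention: directory-style URLs end with a slash
    canonical = urlunsplit(("https", CANONICAL_HOST, path, parts.query, ""))
    return None if canonical == url else canonical
```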

Duplicate Content & Canonicals (Including eCommerce)

Managing duplicate content is one of the most overlooked yet critical aspects of technical SEO. It affects how search engines crawl, interpret, and consolidate ranking signals across similar URLs. Proper canonicalization ensures that authority flows to the intended web page, avoiding dilution and confusion in indexing.

Common Sources of Duplication

Duplicate or near-duplicate URLs often arise unintentionally, especially in large sites and eCommerce setups.

Typical sources include:

- URL parameters (sorting, filters, tracking)

- UTM tags or campaign URLs

- Printer-friendly or “share” versions of the same page

- Pagination or session-based IDs

- Near-duplicate product or category pages

If unmanaged, these variations can create multiple versions of the same content, wasting crawl budget and confusing search engine algorithms.

Canonical Tag Patterns

Canonical tags indicate which URL should be considered the primary version. Use them consistently across all pages.

Best practices:

- Always include self-referencing canonicals on key pages.

- Use cross-domain canonicals when syndicating content or mirroring catalog data.

- Use the URL Inspection tool in Google Search Console to confirm that Google respects your canonicals (compare the user-declared and Google-selected canonical).

In eCommerce setups, set product or category canonicals at the cleanest, most representative URL—usually without parameters or tracking tags.
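A minimal sketch of deriving that “cleanest” URL by dropping tracking parameters; the `TRACKING_PARAMS` set is a hypothetical example, and sorting the remaining parameters assumes their order never changes page content:

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical list of parameters that never change page content on this site.
TRACKING_PARAMS = {"gclid", "fbclid", "msclkid", "sessionid", "ref"}


def canonical_url(url):
    """Strip tracking/session parameters (and any utm_* key), sort the rest, drop the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in TRACKING_PARAMS and not k.lower().startswith("utm_")
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))
```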

When to 301 vs. Canonical vs. Noindex

Each duplication scenario calls for a different solution depending on purpose and permanence:

| Situation | Best Option | Why |
| --- | --- | --- |
| Page permanently moved or replaced | 301 Redirect | Transfers link equity and deindexes the old URL |
| Temporary duplicate (e.g., filters or near-dupes) | Canonical Tag | Consolidates ranking signals while keeping variations accessible |
| Page needed for users but not search visibility | Noindex | Keeps content usable without competing in SERPs |

Choosing the right method prevents unnecessary deindexation or crawl waste while preserving equity flow.

Product Variants & Pagination

Product variations—color, size, or material—commonly generate multiple URLs for the same item.

Use a rel=canonical from variant pages to the main product version, unless unique attributes (like different descriptions or media) justify indexing.

For paginated product listings:

- Canonical each page to itself (not page 1).

- Link to “View All” pages only when load speed allows.

- Combine canonical logic with user-friendly, descriptive URLs that match filter intent.

This hybrid approach prevents duplicate content issues while maintaining strong UX and SEO signals.

Effective canonicalization protects crawl efficiency, consolidates authority, and preserves index integrity—core outcomes that show why technical SEO is essential for scalable site health.

By combining correct use of canonicals, redirects, and noindex tags, your site remains clean, crawlable, and optimized for both users and search engines.

Structured Data (Schema) for Visibility & Entities

Structured data bridges content and context. It helps search engines interpret meaning, display enhanced results, and associate your brand or authors with topics. When implemented correctly, schema markup boosts visibility in search engine results and strengthens entity relationships that feed knowledge graphs and AI summaries.

Schema Types That Matter by Template

Each page template should include schema types aligned with its intent.

Below is a reference for high-impact markups:

| Template | Recommended Schema Types |
| --- | --- |
| Homepage / About | Organization, LocalBusiness |
| Blog / News Post | Article or NewsArticle, BreadcrumbList |
| Product Page | Product, Offer, AggregateRating, Review |
| FAQ Page | FAQPage |
| How-to Guide | HowTo |
| Video Page | VideoObject |
| Event Page | Event |
| Job Posting | JobPosting |
| Category / Navigation Page | BreadcrumbList |

Implement JSON-LD format wherever possible (Google’s preferred method) and ensure that schema aligns with the visible on-page content.

Quality Checks & Validation

Not all schema fields are required, but missing key attributes can prevent rich results.

Follow these best practices for quality and consistency:

- Always include required and recommended properties (e.g., headline, author, datePublished).

- Test markup using Google’s Rich Results Test and Schema.org’s validator before deployment.

- Monitor Search Console > Enhancements for warnings or invalid item errors.

- Avoid duplicating or nesting conflicting schema types (e.g., two Article tags on one page).

Proper validation ensures schema enhances, not confuses, crawler understanding.
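Some of these checks can be automated before you run the official validators. A minimal sketch; the `EXPECTED_PROPERTIES` table is a hypothetical house rule, not Google’s full list of required and recommended properties, so keep the Rich Results Test as the final check:

```python
import json

# Hypothetical house rules; consult Google's docs for each rich result type.
EXPECTED_PROPERTIES = {
    "Article": {"headline", "author", "datePublished"},
    "Product": {"name", "offers"},
    "BreadcrumbList": {"itemListElement"},
}


def schema_problems(jsonld_text):
    """Return readable problems for a JSON-LD block (a single object or a list of objects)."""
    data = json.loads(jsonld_text)
    items = data if isinstance(data, list) else [data]
    problems, type_counts = [], {}
    for item in items:
        schema_type = item.get("@type", "(missing @type)")
        if isinstance(schema_type, list):  # e.g. ["Article", "NewsArticle"]
            schema_type = "/".join(schema_type)
        type_counts[schema_type] = type_counts.get(schema_type, 0) + 1
        missing = EXPECTED_PROPERTIES.get(schema_type, set()) - set(item)
        if missing:
            problems.append(f"{schema_type}: missing {', '.join(sorted(missing))}")
    problems += [f"{t}: declared {n} times" for t, n in type_counts.items() if n > 1]
    return problems
```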

Entity SEO: Connecting Brand, Authors & Topics

Schema isn’t just for presentation—it’s for identity.

Use entity-based markup to connect your site with recognized profiles and data sources.

Implementation tips:

- Use the Organization and Person schema to represent your brand and authors.

- Add ‘sameAs’ attributes linking to verified profiles (e.g., LinkedIn, Crunchbase, Wikipedia, or company listings).

- Reference your primary Knowledge Graph entities where possible to build authority and trust.

- For brands, embed consistent NAP (Name, Address, Phone) details in both schema and on-page content.

This approach improves topical association, which helps search engines build stronger entity connections between your brand, its content, and key subject areas.
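A minimal sketch of `Organization` markup with `sameAs` links and NAP details; the function name, company details, and profile URL are hypothetical, while the property names (`sameAs`, `telephone`, `address`, `PostalAddress`, `streetAddress`, `addressLocality`) are schema.org terms:

```python
import json


def organization_jsonld(name, url, logo, same_as, phone=None, address=None):
    """Organization JSON-LD with sameAs links; keep NAP values identical to the visible page."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": list(same_as),  # verified profiles only
    }
    if phone:
        data["telephone"] = phone
    if address:
        data["address"] = {"@type": "PostalAddress", **address}
    return json.dumps(data, indent=2)
```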

Maintenance & Deployment Control

Schema must evolve as templates or site structures change.

Adopt a simple versioning and QA process:

- Maintain schema snippets in your CMS or tag manager with clear version history.

- Validate new or updated code in staging environments before production.

- Periodically re-run Rich Results and Schema.org tests after theme or CMS updates.

- Document all schema changes in your audit logs or version control system (e.g., Git, changelog file).

Proactive maintenance ensures that schema updates never break rendering or introduce invalid markup.

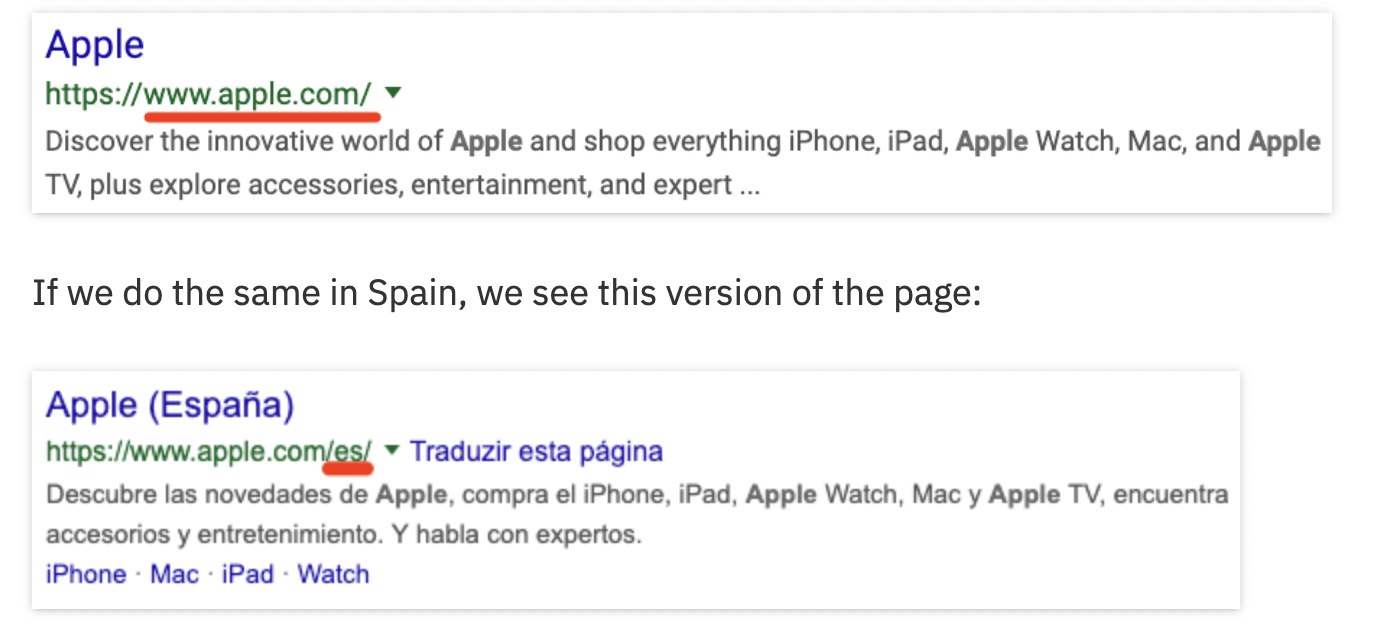

Internationalization (Hreflang Without Tears)

Global visibility depends on serving the right content to the right audience. Hreflang annotations tell search engines which language or regional version of a page to show, preventing duplicate indexing issues across multilingual or multi-regional sites. When implemented correctly, they improve user experience and maintain unified search engine rankings for each market.

Language & Region Pairs

Each localized page should specify its language and target region using ISO codes (e.g., en-us, fr-ca, es-es).

Every hreflang set must include:

- Self-referencing link (the page refers to itself).

- Reciprocal links between all language versions.

- x-default tag for fallback when no language match exists.


Use consistent annotation either in HTML <head>, HTTP headers, or XML sitemaps—never a mix across the same set.
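Because every page in a group must carry the same complete set, generating the tags from one mapping avoids missing self-references and broken reciprocity. A minimal sketch for the HTML `<head>` method; the function name and URLs are hypothetical:

```python
from html import escape


def hreflang_links(alternates, x_default):
    """Render the full hreflang set; place the same set on every page in the group.

    alternates: dict mapping hreflang code (e.g. "en-us") -> absolute URL.
    Listing every version on every page gives each page its self-reference
    and makes the annotations reciprocal.
    """
    tags = [f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
            for code, url in sorted(alternates.items())]
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


print(hreflang_links(
    {"en-us": "https://example.com/en-us/",
     "fr-ca": "https://example.com/fr-ca/",
     "es-es": "https://example.com/es-es/"},
    x_default="https://example.com/",
))
```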

Site Structure Options

The structure you choose defines maintenance complexity and authority flow.

| Structure | Example | Pros | Cons |
| --- | --- | --- | --- |
| ccTLDs | example.fr, example.de | Strong regional signal | Costly; authority split across domains |
| Subdomains | fr.example.com | Easier to manage; flexible | Slightly weaker geo signal |
| Subfolders | example.com/fr/ | Consolidates authority; simplest to scale | Requires strong internal governance |

For most organizations, subfolders offer the best balance between SEO performance and maintainability.

Mixed Hreflang and Canonical Rules

Conflicts between hreflang and canonical tags are a common cause of failed localization.

Remember: the canonical URL tells Google which version is “preferred,” while hreflang tells it which is “relevant” for each region. These signals must complement each other.

Rules to avoid conflicts:

- Each localized page should canonicalize to itself, not to another language version.

- Hreflang annotations should include every alternate version, including self-reference.

- If using regional canonicals (e.g., /en-gb/), ensure all hreflang entries match the canonical URLs exactly.

- Avoid dynamically changing canonicals by IP or session—use static, language-based mappings.

Google retired Search Console’s International Targeting report in 2022, so audit hreflang with a crawler such as Screaming Frog or Ahrefs Site Audit to catch mismatched or missing pairs early.
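Crawl data can also be checked with a short script. A minimal sketch, assuming you have already extracted each page’s hreflang annotations and canonical URL (function name and URLs hypothetical); it flags non-self canonicals, missing self-references, and missing return links:

```python
def hreflang_errors(annotations, canonicals):
    """Check a group of localized pages for common hreflang/canonical conflicts.

    annotations: dict page_url -> {hreflang_code: target_url} as declared on that page
    canonicals:  dict page_url -> canonical URL declared on that page
    """
    errors = []
    for page, links in annotations.items():
        if canonicals.get(page) != page:
            errors.append(f"{page}: canonical is {canonicals.get(page)}, expected self")
        if page not in links.values():
            errors.append(f"{page}: missing self-reference")
        for code, target in links.items():
            if target == page:
                continue
            back_links = annotations.get(target)
            if back_links is None:
                errors.append(f"{page}: {code} points to unannotated or unknown {target}")
            elif page not in back_links.values():
                errors.append(f"{page}: {target} does not link back")
    return errors
```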

Logs, Monitoring & Alerting (Often Missed)

Consistent monitoring ensures your technical SEO foundation stays healthy long after implementation. Regular analysis prevents crawl waste, indexing errors, and unnoticed performance drops.

Server Log Analysis

Review server logs monthly to confirm:

- Real bot activity (Googlebot, Bingbot, etc.) vs fake crawlers.

- Crawl budget waste on non-indexable pages or parameters.

- Missed sections—important pages not being crawled.

Tools like Screaming Frog Log Analyzer or Splunk help visualize trends and spot crawl gaps.
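Before reaching for those tools, a short script can summarize where Googlebot spends its requests. A minimal sketch, assuming the Apache/Nginx combined log format; the function name is hypothetical, and because user-agent strings can be faked, confirm real Googlebot traffic separately with a reverse DNS lookup followed by a forward lookup:

```python
import re
from collections import Counter

# Combined Log Format: ip ident user [time] "METHOD path PROTO" status bytes "referer" "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests that claim to be Googlebot, by top-level section and by status code."""
    sections, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        path = match["path"].split("?", 1)[0].strip("/")
        section = "/" + path.split("/", 1)[0] + "/" if path else "/"
        sections[section] += 1
        statuses[match["status"]] += 1
    return sections, statuses
```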

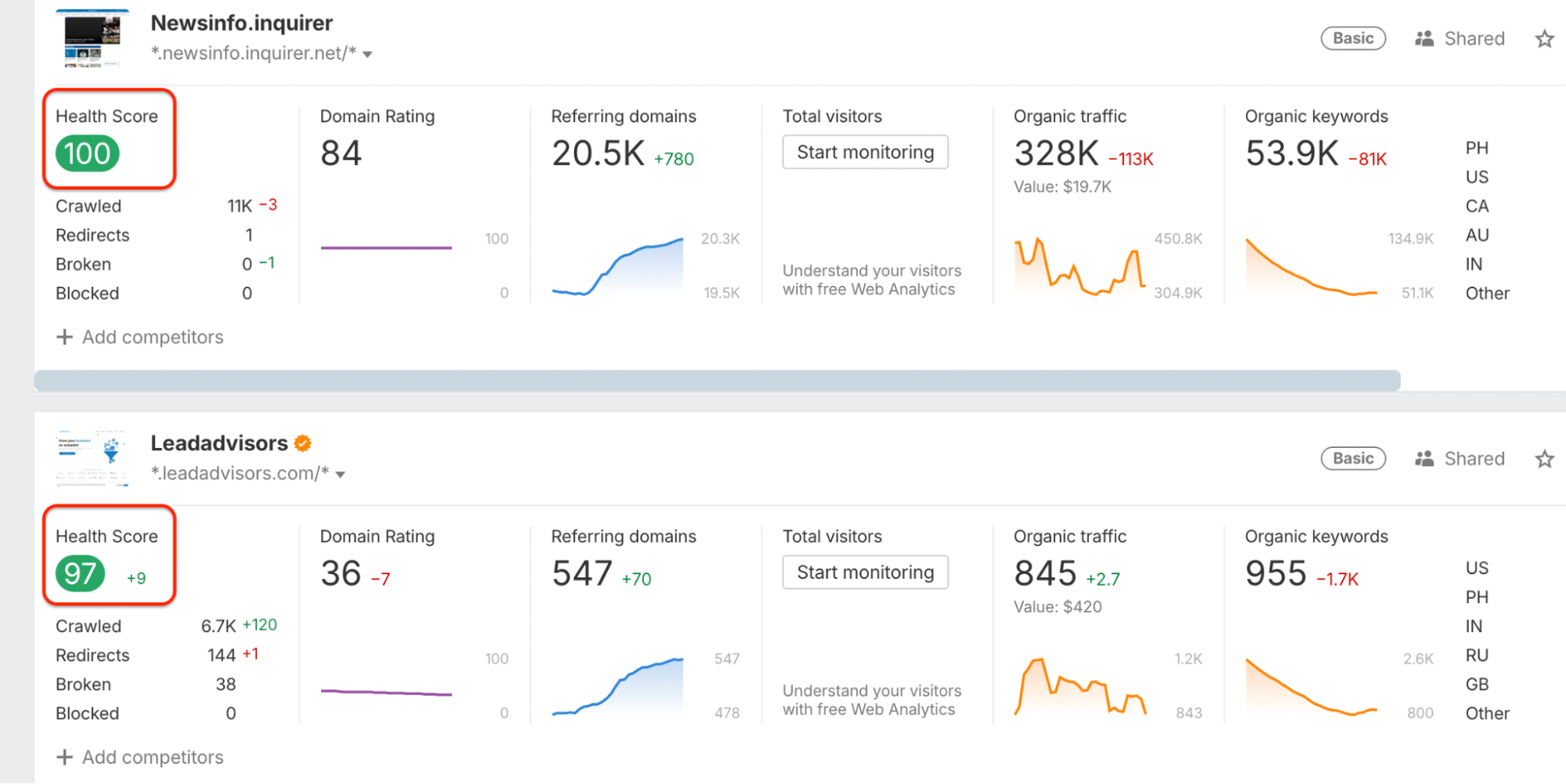

Health Dashboards

Track live site metrics through dashboards that combine:

- Google Search Console: Index coverage, crawl stats, CWV trends.

- PageSpeed Insights / Semrush: Performance and mobile usability.

- Ahrefs or Sitebulb: Broken links and redirect anomalies.

Quick visibility enables faster response to technical regressions.

Change Monitoring

Set alerts for critical shifts such as:

- New redirects or URL slug updates

- Canonical or robots.txt changes

- Sitemap drift—pages added or dropped unexpectedly

Proactive monitoring catches issues before they impact search engine rankings or crawl efficiency.
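Canonical and robots alerts can start as a diff between two scheduled crawl exports. A minimal sketch, assuming a hypothetical snapshot format that maps each URL to its status code, canonical target, and meta robots value; `diff_snapshots` and `robots_txt_changes` are illustrative names:

```python
import difflib

WATCHED_FIELDS = ("status", "canonical", "robots")


def diff_snapshots(before: dict, after: dict) -> list[str]:
    """Compare snapshots shaped like {url: {"status": ..., "canonical": ..., "robots": ...}}."""
    alerts = []
    for url in sorted(before.keys() | after.keys()):
        old, new = before.get(url), after.get(url)
        if old is None:
            alerts.append(f"NEW URL: {url}")
        elif new is None:
            alerts.append(f"DROPPED URL: {url}")
        else:
            for field in WATCHED_FIELDS:
                if old.get(field) != new.get(field):
                    alerts.append(
                        f"{field.upper()} CHANGED: {url}: {old.get(field)!r} -> {new.get(field)!r}"
                    )
    return alerts


def robots_txt_changes(old_text: str, new_text: str) -> list[str]:
    """Return only the added (+) and removed (-) lines between two robots.txt versions."""
    diff = difflib.unified_diff(
        old_text.splitlines(), new_text.splitlines(), "before", "after", lineterm=""
    )
    # Skip the "---"/"+++" file headers; keep real line changes.
    return [
        line for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]
```

A change such as `Disallow: /wp-admin/` becoming `Disallow: /` shows up as one removed and one added line, which is exactly the kind of edit worth paging someone about.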

AI Search Readiness

| Focus Area | What It Means | Key Actions |
|---|---|---|
| Crawling by LLMs | Many AI crawlers don’t render JavaScript, limiting what they “see.” | • Keep critical copy and links visible in the HTML. • Review Cloudflare/WAF settings for AI crawler access. • Decide your allow/block policy intentionally. |
| llms.txt | A proposed Markdown file that points AI tools to a site’s key content. Unlike robots.txt, it controls no access, and adoption is weak. | • Use it only if it aligns with your business or legal stance. • Treat it as a declaration, not enforcement. • Keep it consistent with robots.txt and your terms of service. |
| Hallucinated URLs | AI tools may cite non-existent or outdated URLs, causing 404s. | • Detect them via Ahrefs or server logs. • 301 redirect them to the best live equivalents. • Create pattern-based catch-all redirects for recurring false URLs. |
| Content Provenance | Authenticity matters: avoid AI “fingerprints” and unclear authorship. | • Remove metadata left behind by AI tools or plugins. • Add Organization and Person schema. • Keep content verifiably human-edited. |
| IndexNow (AIOSEO) | A protocol for fast URL discovery by Bing, Yandex, and other participating engines. | • Enable IndexNow in AIOSEO. • Prioritize it on frequently updated or large sites. • Use it alongside XML sitemaps for near-real-time updates. |
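For hallucinated URLs, a string-similarity pass can shortlist redirect targets before anyone writes 301 rules. This sketch swaps in Python's `difflib` similarity ratio as a stand-in heuristic; `suggest_redirects`, the `cutoff` value, and the sample paths are assumptions, and every suggestion should be reviewed by a person before it becomes a redirect:

```python
import difflib
from urllib.parse import urlsplit


def suggest_redirects(not_found: list[str], live_paths: list[str], cutoff: float = 0.6) -> dict:
    """Map each 404 path to the most similar live path, or None when nothing is close enough."""
    # Compare normalized forms, since cited URLs often differ only in case or a trailing slash.
    normalized = {path.rstrip("/").lower(): path for path in live_paths}
    suggestions = {}
    for path in not_found:
        key = urlsplit(path).path.rstrip("/").lower()
        match = difflib.get_close_matches(key, list(normalized), n=1, cutoff=cutoff)
        suggestions[path] = normalized[match[0]] if match else None
    return suggestions


live = ["/blog/technical-seo-checklist/", "/blog/core-web-vitals-guide/", "/pricing/"]
print(suggest_redirects(["/blog/technical-seo-checklist-2024", "/xyz"], live))
```

Paths that come back as `None` are candidates for a pattern-based catch-all or for being left as honest 404s, rather than forced onto an unrelated page.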

Platform-Specific Playbooks (Brief, Actionable)

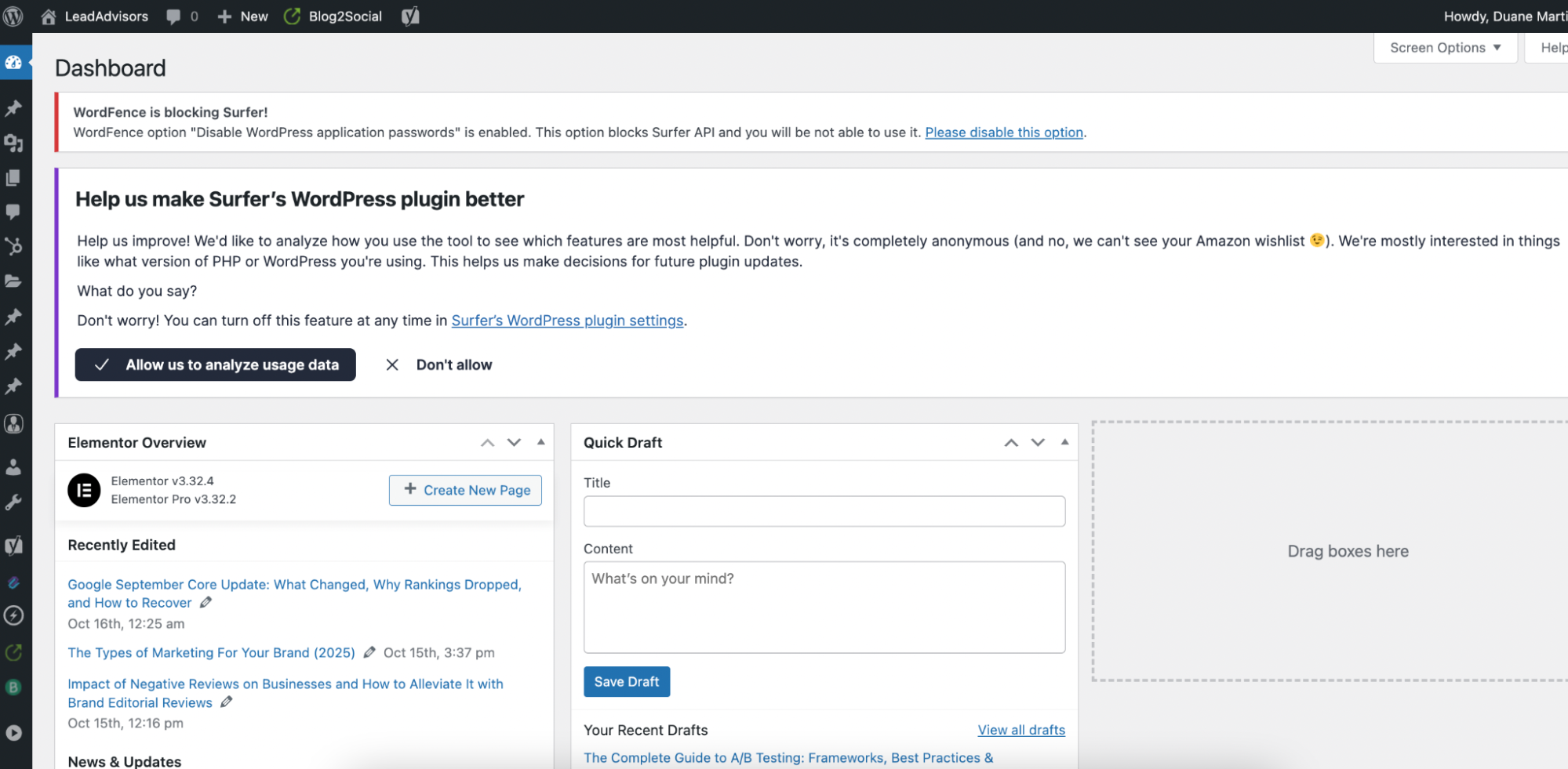

WordPress

WordPress offers flexibility but can easily create technical SEO clutter without proper controls. Start by installing core tools such as AIOSEO, Redirection, and a schema plugin to manage metadata, sitemaps, and canonical tags efficiently. Keep your robots.txt file simple—block /wp-admin/ and internal search pages but allow essential assets.
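Before deploying a robots.txt change, it helps to assert what it should allow and block. A minimal sketch using Python's standard `urllib.robotparser`; the file contents, the `/search/` path for internal search, and the `mismatches` helper are hypothetical examples, not WordPress defaults:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt. The Allow line comes first because Python's parser
# applies the first matching rule, while Google applies the most specific one;
# with this ordering both interpretations give the same answer.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /search/
Sitemap: https://example.com/sitemap.xml
"""

EXPECTATIONS = {  # path -> should crawlers be allowed?
    "/wp-admin/": False,
    "/wp-admin/admin-ajax.php": True,
    "/search/shoes/": False,
    "/wp-content/uploads/logo.png": True,
    "/blog/hello-world/": True,
}


def mismatches(robots_txt: str, expectations: dict[str, bool], agent: str = "Googlebot") -> list[str]:
    """Return the paths whose allow/block result differs from what you expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path, allowed in expectations.items()
            if parser.can_fetch(agent, path) != allowed]


print(mismatches(ROBOTS_TXT, EXPECTATIONS))
```

An empty list means the file behaves as intended for the listed paths; wiring this into CI catches an accidental `Disallow: /` before it ships.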

Avoid indexing thin content such as tag archives or media attachment pages: redirect attachment pages to their parent post, and noindex tag archives or redirect them to the most relevant category. Stick to one sitemap source and clean permalink structures for consistency. Regularly audit canonicals and sitemap updates in Google Search Console to ensure index integrity and prevent duplicate content issues.

Shopify / Headless (Next.js, Nuxt, etc.)

Shopify and headless frameworks demand special attention to rendering and crawlability. Shopify’s Liquid templates often generate parameterized or duplicate URLs, such as the same product reachable under both /collections/<collection>/products/<handle> and /products/<handle>. Canonicalize these where needed and audit URL patterns regularly.
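One way to keep parameterized and collection-scoped duplicates consistent is to derive canonicals from a single normalization function. A minimal sketch under stated assumptions: `canonical_url` is hypothetical, the tracking parameters listed are illustrative, and the collection rule assumes the common Shopify `/collections/<collection>/products/<handle>` pattern, so check your theme's actual canonical output before relying on it:

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical rules: parameters assumed never to change page content.
TRACKING_PARAMS = {"gclid", "fbclid"}
TRACKING_PREFIXES = ("utm_",)
# Assumes Shopify-style collection-scoped product paths.
COLLECTION_PRODUCT = re.compile(r"^/collections/[^/]+(/products/[^/]+)/?$")


def canonical_url(url: str) -> str:
    """Normalize a storefront URL to the form you would put in rel="canonical"."""
    parts = urlsplit(url)
    path = parts.path
    match = COLLECTION_PRODUCT.match(path)
    if match:
        path = match.group(1)  # /collections/summer/products/tee -> /products/tee
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
        and not key.lower().startswith(TRACKING_PREFIXES)
    )
    # Lowercase the host, drop the fragment, keep remaining parameters in sorted order.
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(kept), ""))


print(canonical_url(
    "https://Example.com/collections/summer/products/tee?utm_source=mail&color=blue#reviews"
))
```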

For headless sites, use Server-Side Rendering (SSR) or Incremental Static Regeneration (ISR) so crawlers receive content in the initial HTML. Avoid relying on client-side JavaScript to inject text or links, since many crawlers, including most AI crawlers, do not execute complex scripts. Pre-render critical product and collection pages, verify their HTML output with the URL Inspection tool in Google Search Console, and check their performance in PageSpeed Insights.
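A quick way to confirm crawlers can see critical content is to inspect the raw server response without executing any JavaScript. A minimal sketch using Python's standard `html.parser`; `missing_from_html` and the sample markup are hypothetical, and fetching the HTML (for example with `curl`) is left out:

```python
from html.parser import HTMLParser


class _Extractor(HTMLParser):
    """Collect visible text and link hrefs, ignoring <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.links, self.text, self._skip = set(), [], 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.text.append(data)


def missing_from_html(raw_html, required_text, required_links):
    """Report required copy and links that are absent from the unrendered HTML."""
    parser = _Extractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.text).split())  # collapse whitespace
    return {
        "text": [t for t in required_text if t not in text],
        "links": [href for href in required_links if href not in parser.links],
    }


ssr = '<h1>Trail Runner 2</h1><p>Waterproof trail shoe.</p><a href="/collections/trail">Trail</a>'
csr = '<div id="root"></div><script>render("Trail Runner 2")</script>'
print(missing_from_html(csr, ["Trail Runner 2"], ["/collections/trail"]))
```

The client-rendered page fails both checks: its product name exists only inside a script, which non-rendering crawlers never execute.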

Technical SEO Tools Stack (Balanced View)

A strong technical SEO stack blends analytics, crawling, and monitoring tools to give both visibility and control. The goal is balance—combining real-world performance data with scalable diagnostics.

Core Platforms

Start with the essentials: Google Search Console and Bing Webmaster Tools for index coverage and structured data validation. Use PageSpeed Insights and the Core Web Vitals report to assess real-world user performance across devices.

Crawling & Diagnostics

For deep audits, pair lightweight and enterprise tools:

- Ahrefs Webmaster Tools (AWT): Quick backlink checks and index insights.

- Screaming Frog: Local crawl control, custom extraction, and redirect mapping.

- Sitebulb and Lumar (formerly Deepcrawl): Scalable visual audits for larger architectures.

Log Analysis

Use Screaming Frog Log Analyzer or the ELK stack (Elasticsearch, Logstash, Kibana) to understand how search bots crawl your site, identify crawl waste, and confirm priority page access.

WordPress Toolkit

For WordPress-based environments, AIOSEO centralizes core tasks, including robots management, XML sitemaps, redirects, and schema markup. Complement with Broken Link Checker for maintaining internal and external links.

Monitoring & Change Detection

Maintain visibility over uptime and site changes through automated systems:

- Uptime monitors for availability tracking.

- Sitemap diffing or Git hooks to detect URL or canonical shifts before they affect indexing.
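Sitemap diffing can be as simple as comparing the `<loc>` entries of two sitemap versions, for example in a CI job or Git hook. A minimal sketch with Python's standard `xml.etree.ElementTree`; it assumes plain `urlset` sitemaps (not sitemap index files), and `sitemap_drift` is a hypothetical name:

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set[str]:
    """Extract every <loc> URL from a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)}


def sitemap_drift(old_xml: str, new_xml: str) -> dict[str, list[str]]:
    """Report URLs added to or dropped from the sitemap between two versions."""
    old, new = sitemap_urls(old_xml), sitemap_urls(new_xml)
    return {"added": sorted(new - old), "dropped": sorted(old - new)}


OLD = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/old/</loc></url>"
    "</urlset>"
)
NEW = OLD.replace("/old/", "/new/")
print(sitemap_drift(OLD, NEW))
```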

Governance: Processes That Keep You Clean

Effective technical SEO relies on ongoing discipline, not one-time fixes. A solid governance process ensures every release, content update, and technical change maintains crawl efficiency and site health.

Before launch, teams should confirm:

- Correct canonicals

- Robots directives

- Schema markup

- Sitemap inclusion
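These four checks can run automatically against a staging page before release. A minimal sketch; `prelaunch_issues`, the sample markup, and the rule that the canonical must exactly equal the page URL are assumptions to adapt to your own conventions:

```python
import json
from html.parser import HTMLParser


class _HeadAudit(HTMLParser):
    """Record the canonical URL, meta robots value, and JSON-LD blocks of a page."""

    def __init__(self):
        super().__init__()
        self.canonical, self.robots, self.jsonld = None, "", []
        self._buffer = None  # collects text while inside a JSON-LD <script>

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.canonical = a.get("href")
        elif tag == "meta" and (a.get("name") or "").lower() == "robots":
            self.robots = (a.get("content") or "").lower()
        elif tag == "script" and (a.get("type") or "").lower() == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.jsonld.append(json.loads("".join(self._buffer)))
            self._buffer = None


def prelaunch_issues(url, html, sitemap_urls):
    audit = _HeadAudit()
    audit.feed(html)
    audit.close()
    issues = []
    if audit.canonical != url:
        issues.append(f"canonical is {audit.canonical!r}, expected self-reference")
    if "noindex" in audit.robots:
        issues.append("meta robots contains noindex")
    if not audit.jsonld:
        issues.append("no JSON-LD schema markup found")
    if url not in sitemap_urls:
        issues.append("URL missing from XML sitemap")
    return issues
```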

Weekly sweeps identify 404 errors, redirect chains, and shifts in indexable pages, while monthly reviews focus on Core Web Vitals and image optimization to prevent performance degradation.
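Redirect chains and loops are easy to detect from an exported redirect map. A minimal sketch, assuming a hypothetical export that maps each source path to its target; `redirect_problems` is an illustrative name, and `max_hops=1` treats anything beyond a single redirect as a chain to flatten:

```python
def redirect_problems(redirects: dict[str, str], max_hops: int = 1) -> dict[str, str]:
    """Flag sources that need more than `max_hops` redirects, or that loop."""
    problems = {}
    for source in sorted(redirects):
        current, hops, seen = source, 0, {source}
        while current in redirects:
            current = redirects[current]
            hops += 1
            if current in seen:
                problems[source] = "loop"
                break
            seen.add(current)
        else:  # no loop: `current` is the final destination
            if hops > max_hops:
                problems[source] = f"chain of {hops} hops; redirect straight to {current}"
    return problems


redirect_map = {"/a": "/b", "/b": "/c", "/old": "/new", "/x": "/y", "/y": "/x"}
print(redirect_problems(redirect_map))
```

Pointing every flagged source directly at its final destination removes the extra hops, and loops need a manual decision about which URL should actually resolve.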

Quarterly log and crawl-budget analyses verify that search engines focus on priority URLs rather than wasting crawl capacity. Together, these recurring checks form a continuous improvement loop that keeps your site clean, fast, and fully aligned with evolving SEO standards.

Frequently Asked Questions

Does blocking in robots.txt stop indexing?

Should I use rel=prev/next?

Is duplicate content a penalty?

What Core Web Vitals threshold really matters?

Do 301 redirects pass PageRank?

Is schema markup really necessary for SEO?

Conclusion

Strong technical SEO turns your website into a reliable, crawl-efficient system built for lasting visibility. When architecture, speed, structure, and governance align, search engines can accurately interpret and rank your content.

Regular audits and automation ensure consistent performance, while clean code and structured data prepare your site for both traditional and AI-driven discovery. Ultimately, effective technical SEO works quietly, enabling higher rankings, faster access, and a better user experience.